Introducing Booksnake: A Scholarly App for Transforming Existing Digitized Archival Materials into Life-Size Virtual Objects for Embodied Interaction in Physical Space, using IIIF and Augmented Reality

INTRODUCTION

Close engagement with primary sources is foundational to humanities research, teaching, and learning 1, 2, 3. We are fortunate to live in an age of digital abundance: Over the past three decades, galleries, libraries, archives, and museums (collectively known as GLAM institutions) have undertaken initiatives to digitize their collection holdings, making millions of primary-source archival materials freely available online and more accessible to more people than ever before 4, 5, 6, 7. Increasingly, the world’s myriad cultural heritage materials — architectural plans, books, codices, correspondence, drawings, ephemera, manuscripts, maps, newspapers, paintings, paperwork, periodicals, photographs, postcards, posters, prints, sheet music, sketches, slides, and more — are now only a click away.

But interacting with digitized archival materials in a Web browser can be a frustrating experience — one that fails to replicate the embodied engagement possible during in-person research. As users, we have become resigned to the limitations of Web-based image viewers. How we have to fiddle with Zoom In and Zoom Out buttons to awkwardly jump between fixed levels of magnification. How we must laboriously click and drag, click and drag, over and over, to follow a line of text across a page, or read the length of a newspaper column, or trace a river over a map. How we can’t ever quite tell how big or small something is. We have long accepted these frictions as necessary compromises to quickly and easily view materials online instead of making an inconvenient trip to a physical archive itself — even as we also understand that clicking around in a Web viewer pales in comparison to the rich, fluid, embodied experience of interacting with a physical item in a museum gallery or library reading room.

Figure 1. Booksnake transforms existing digitized archival materials, like this 1909 bird’s-eye-view map of Los Angeles, into custom virtual objects for embodied exploration in physical space, by using the augmented reality technology in consumer smartphones and tablets. The virtual object remains anchored to the table surface even as the user and device move.

As a step beyond these limitations, we present a new kind of image viewer. Booksnake is a scholarly app for iPhones and iPads that enables users to interact with existing digitized archival materials at life size in physical space (see figure 1). Instead of displaying digital images on a flat screen for indirect manipulation, Booksnake uses augmented reality, the process of overlaying a virtual object on physical space 8, to bring digitized items into the physical world for embodied exploration. Existing image viewers display image files as a means of conveying visual information about an archival item. In contrast, Booksnake converts image files into virtual objects in order to create the feeling of being in the item’s presence. To do this, Booksnake automatically transforms digital images of archival materials into custom, size-accurate virtual objects, then dynamically inserts these virtual objects into the live camera view on a smartphone or tablet for interaction in physical space (see figure 2). Booksnake is available as a free app for iPhone and iPad on Apple’s App Store: https://apps.apple.com/us/app/booksnake/id1543247176.

Booksnake’s use of AR makes it feel like a digitized item is physically present in a user’s real-world environment, enabling closer and richer engagement with digitized materials. A Booksnake user aims their phone or tablet at a flat surface (like a table, wall, bed, or floor) and taps the screen to anchor the virtual object to the physical surface. As the user moves, Booksnake uses information from the device’s cameras and sensors to continually adjust the virtual object’s relative position, orientation, and size in the camera view, such that the item appears to remain stationary in physical space as the user and device move. A user thus treats Booksnake like a lens, looking through their device’s screen at a virtual object overlaid on the physical world.
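The effect described above can be illustrated with a deliberately simplified pinhole-camera model. The sketch below is not how ARKit or RealityKit work internally (they track full 3D position and orientation and use each device's calibrated camera parameters); it only shows why an object that stays fixed in physical space must be redrawn at a different on-screen position and size whenever the device moves. The focal length, the anchored map's width, and the function names are hypothetical values chosen for illustration.

```python
# Simplified pinhole-camera illustration (hypothetical values; not ARKit's pipeline).
# The virtual object stays fixed in world coordinates; only the camera moves.

FOCAL_LENGTH_PX = 1500.0  # hypothetical focal length, in pixels


def project_x(world_x_m: float, camera_x_m: float, depth_m: float,
              focal_px: float = FOCAL_LENGTH_PX) -> float:
    """Horizontal screen position (pixels from image center) of a world point."""
    return focal_px * (world_x_m - camera_x_m) / depth_m


def apparent_width_px(width_m: float, depth_m: float,
                      focal_px: float = FOCAL_LENGTH_PX) -> float:
    """On-screen width of an object of physical width width_m at distance depth_m."""
    return focal_px * width_m / depth_m


# A hypothetical 1-meter-wide map anchored with its edges at x = -0.5 m and x = +0.5 m.
LEFT_EDGE_M, RIGHT_EDGE_M = -0.5, 0.5

if __name__ == "__main__":
    for camera_x, depth in [(0.0, 2.0), (0.0, 1.0), (0.25, 1.0)]:
        left = project_x(LEFT_EDGE_M, camera_x, depth)
        right = project_x(RIGHT_EDGE_M, camera_x, depth)
        print(f"camera x={camera_x} m, distance={depth} m: "
              f"edges at {left:.0f} px and {right:.0f} px, width {right - left:.0f} px")
```

In this toy model, halving the distance from 2 m to 1 m doubles the map's on-screen width (750 px to 1500 px), while stepping 0.25 m to the side shifts both edges 375 px across the screen without changing the width. The map itself never moves; only its projection does, which is what makes the device behave like a lens.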

The project takes its name from book snakes, the weighted strings used by archival researchers to hold fragile physical materials in place. Similarly, Booksnake enables users to keep virtual materials in place on physical surfaces in the real world. By virtualizing the experience of embodied interaction, Booksnake makes it possible for people who cannot otherwise visit an archive (due to cost, schedule, distance, disability, or other reasons) to physically engage with digitized materials.

Booksnake represents a proof-of-concept, demonstrating that it is possible to automatically transform existing digital image files of cultural heritage materials into life-size virtual objects on demand. We have designed Booksnake to easily link with existing digitized collections via the International Image Interoperability Framework (or IIIF, pronounced triple-eye-eff), a widely supported set of open Web protocols for digitally accessing archival materials and related metadata, developed and maintained by the GLAM community 9, 10, 11. The first version of Booksnake uses IIIF to access Library of Congress digitized collections, including the Chronicling America collection of historical newspapers. Our goal is for Booksnake to be able to display any image file accessible through IIIF. For now, the considerable variability in how institutions record dimensional metadata means that we must customize Booksnake’s link to each digitized collection, as we discuss further below.
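To see why dimensional metadata complicates this goal, consider that an item's physical size is often recorded as free text rather than as structured numbers. The minimal Python sketch below, which is not Booksnake's code, pulls the first "A x B unit" expression out of a catalog string and converts it to meters. The sample strings, the set of recognized units, and the function name `parse_dimensions` are hypothetical; real collections use many more conventions than this, which is exactly why per-collection customization is needed.

```python
import re
from typing import Optional, Tuple

# Conversion factors to meters for the units this sketch recognizes (an assumption;
# real catalog records use more units and spellings than these).
UNIT_TO_METERS = {"mm": 0.001, "cm": 0.01, "m": 1.0, "in": 0.0254}

# Matches expressions such as "46 x 61 cm", "46×61cm", or "10.5 x 12 in."
DIMENSION_PATTERN = re.compile(
    r"(\d+(?:\.\d+)?)\s*[x×]\s*(\d+(?:\.\d+)?)\s*(mm|cm|m|in)\b",
    re.IGNORECASE,
)


def parse_dimensions(text: str) -> Optional[Tuple[float, float]]:
    """Return the first pair of dimensions found in `text`, in meters, or None.

    The pair is returned in the order it appears; whether that order means
    height x width or width x height depends on each collection's cataloging practice.
    """
    match = DIMENSION_PATTERN.search(text)
    if match is None:
        return None
    first, second, unit = match.groups()
    scale = UNIT_TO_METERS[unit.lower()]
    return float(first) * scale, float(second) * scale


if __name__ == "__main__":
    # Made-up sample strings for illustration only.
    for sample in ["1 map : col. ; 46 x 61 cm.", "10.5 x 12 in.", "Dimensions unknown"]:
        print(sample, "->", parse_dimensions(sample))
```

A record with no parseable size simply yields `None`, leaving the caller to decide whether to fall back to some other source of scale or to decline to display the item at life size.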

Our approach represents a novel use of AR for humanistic research, teaching, and learning. While humanists have begun to explore the potential of immersive technologies — primarily virtual reality (VR) and AR — they have largely approached these technologies as publication platforms: ways of hosting custom-built experiences containing visual, textual, spatial, and/or aural content that is manually created or curated by subject-matter experts. Examples include reconstructions of twelfth-century Angkor Wat 12, a Renaissance studiolo 13, events from the Underground Railroad 14, a medieval Irish castle 15, and an 18th-century Paris theatre 16. These are rich, immersive experiences, but they are typically limited to a specific site, narrative, or set of materials. Manually creating these virtual models can be labor-intensive, slow, and expensive.

In contrast, Booksnake is designed as a general-purpose AR tool for archival exploration. Booksnake’s central technical innovation is the automatic transformation of existing digital images of archival cultural heritage materials into dimensionally accurate virtual objects. We aim to enable users to freely select materials from existing digitized cultural heritage collections for embodied interaction. Unlike custom-built immersive humanities projects, Booksnake makes it possible for a user to create virtual objects easily, quickly, and freely. While Booksnake fundamentally differs from existing Web-based image viewers, it is, like them, an empty frame, waiting for a user to fill it with something interesting.

This article presents a conceptual and technical overview of Booksnake. We first critique the accepted method of viewing digitized archival materials in Web-based image viewers on flat screens and discuss the benefits of embodied interaction with archival materials, then describe results from initial user testing and potential use cases. Next, we contextualize Booksnake, as an AR app for mobile devices, within the broader landscape of immersive technologies for cultural heritage. We then detail the technical pipeline by which Booksnake transforms digitized archival materials into virtual objects for interaction in physical space. Ultimately, Booksnake’s use of augmented reality demonstrates the potential of spatial interfaces and embodied interaction to improve accessibility to archival materials and activate digitized collections, while its presentation as a mobile app is an argument for the untapped potential of mobile devices to support humanities research, teaching, and learning.

Booksnake is designed and built by a multidisciplinary team at the University of Southern California. It represents the combined efforts of humanities scholars, librarians, interactive media designers, and computer scientists — most of them students or early-career scholars — extending over four years.17 The project has been financially supported by the Humanities in a Digital World program (under grants from the Mellon Foundation) in USC’s Dornsife College of Letters, Arts, and Sciences; and by the Ahmanson Lab, a scholarly innovation lab in the Sydney Harman Academy for Polymathic Studies in USC Libraries. The research described in this article was supported by a Digital Humanities Advancement Grant (Level II) from the National Endowment for the Humanities (HAA-287859-22). The project’s next phase will be supported by a second NEH Digital Humanities Advancement Grant (Level II) (HAA-304169-25).

FROM FLAT SCREENS TO EMBODIED EXPLORATION

Flat-screen image viewers have long been hiding in plain sight. Each image viewer sits at the end of an institution’s digitization pipeline and offers an interface through which users can access, view, and interact with digital image files of collection materials. These image viewers’ fundamental characteristic is their transparency: The act of viewing a digital image of an archival item on a flat screen has become so common within contemporary humanities practices that we have unthinkingly naturalized it.

But there is nothing natural about using flat-screen image viewers to apprehend archival materials, because everything about the digitization process is novel. The ability to instantly view a digitized archival item on a computer screen rests on technological developments from the last sixty years: the high-resolution digital cameras that capture archival material; the digital asset management software that organizes it; the cheap and redundant cloud storage that houses it; the high-speed networking infrastructure that delivers it; the Web browsers with which we access it; the high-resolution, full-color screens that display it. Even the concept of a graphical user interface, with its representative icons and mouse-based input, is a modern development, first publicly demonstrated by Douglas Engelbart in 1968 and popularized commercially by Apple, with the Lisa and the Macintosh, in the early 1980s 18. Put another way, our now-familiar ways of using a mouse or trackpad to interact with digitized archival materials in a Web-based flat-screen image viewer would be incomprehensibly alien to the people who originally created and used many of these materials — and, in many cases, even to the curators, librarians, and archivists who first acquired and accessioned these items. What does this mean for our relationship with these archival materials?

Yet although Web-based flat-screen image viewers sustain a robust technical development community, most digital humanists have overlooked them. Manuscript scholars, for whom the relationship between text, object, and digital image is particularly important, are a notable exception. Indeed, manuscript scholars have led the charge in identifying flat-screen image viewers as sites of knowledge creation and interpretation, often by expressing their frustration with these viewers’ limited affordances or with the contextual information these viewers shear away 19, 20, 21, 22, 23, 24, 25.

To see flat-screen image viewers more clearly, it helps to understand them within the context of archival digitization practices. Digitization is usually understood as the straightforward conversion of physical objects into digital files, but this process is never simple and always involves multiple interpretive decisions.26 As Johanna Drucker writes, “the way artifacts are [digitally] encoded depends on the parameters set for scanning and photography. These already embody interpretation, since the resolution of an image, the conditions of lighting under which it is produced, and other factors, will alter the outcome” 27. Some of these encoding decisions are structured by digitization guidelines, such as the 2023 standards of the U.S. Federal Agencies Digital Guidelines Initiative 28, while other decisions, such as how to light an item or how to color-correct a digital image, depend on the individual training and judgment of digitization professionals.

A key digitization convention is to render an archival item from an idealized perspective, that of an observer perfectly centered before the item. To achieve this, a photographer typically places the item being digitized perpendicular to the camera’s optical axis, centers the item within the camera’s view, and orthogonally aligns the item with the viewfinder’s edges. During post-processing, a digitization professional can then crop, straighten, and de-skew the resulting image, or stitch together multiple images of a given item into a single cohesive whole. These physical and digital activities produce an observer-independent interpretation of an archival item. Put another way, archival digitization is what Donna Haraway calls a god trick, the act of “seeing everything from nowhere” 29.

Taking these many decisions together, Drucker argues that “digitization is not representation but interpretation” 27 (emphasis original). Understanding digitization as a continuous interpretive process, rather than a simple act of representation, helps us see how this process extends past the production of digital image files and into how these files are presented to human users via traditional flat-screen image viewers.

Flat-screen image viewers encode a set of decisions about how we can (or should) interact with a digitized item. Just as decisions about resolution, lighting, and file types serve to construct a digitized interpretation of a physical object, so too do decisions about interface affordances for an image viewer serve to construct an interpretive space. Following Drucker, image viewers do not simply _represent_ digital image files to a user; they interpret them. Decisions by designers and developers about an image viewer’s interface affordances (how to zoom, turn pages, create annotations, etc.) structure the conditions of possibility for a user’s interactions with a digitized item.

The implications of these decisions are particularly visible when comparing different image viewers. For example, Mirador, a leading IIIF-based image viewer, enables users to compare two images from different repositories side by side 30. A different IIIF-based image viewer, OpenSeadragon, is instead optimized for viewing individual “high-resolution zoomable images” 31. Mirador encourages juxtaposition, while OpenSeadragon emphasizes attention to detail. These two viewers each represent a particular set of assumptions, goals, decisions, and compromises, which in turn shape how their respective users encounter and read a given item, the interpretations those users form, and the knowledge they create.

Flat-screen image viewers generally share three attributes that collectively structure a user’s interactions with a digitized item. First, and most importantly, flat-screen image viewers directly reproduce the idealized “view from nowhere” delivered by the digitization pipeline. Flat-screen image viewers could present digital images in any number of ways — upside down, canted at an angle away from the viewer, obscured by a digital curtain. But instead, flat-screen image viewers play the god trick. Second, flat-screen image viewers rely on indirect manipulation via a mouse or trackpad. To zoom, pan, rotate, or otherwise navigate a digitized item, a user must repeatedly click buttons or click and drag, positioning and re-positioning the digital image in order to apprehend its content. These interaction methods create friction between the user and the digitized item, impeding discovery 32. Finally, flat-screen image viewers arbitrarily scale digital images to fit a user’s computer screen: “Digitisation doesn’t make everything equal, it just makes everything the same size” 33. In a flat-screen image viewer, a monumental painting and its postcard reproduction appear to be the same size, giving digitized materials a false homogeneity and disregarding the contextual information conveyed by an item’s physical dimensions. In sum, flat-screen image viewers are observer-independent interfaces for indirect manipulation of arbitrarily scaled digitized materials.

In contrast, Booksnake is an observer-dependent interface for embodied interaction with life-size digitized materials. When we encounter physical items, we do so through our bodies, from our individual point of view. Humans are not simply “two eyeballs attached by stalks to a brain computer,” as Catherine D’Ignazio and Lauren Klein write in their discussion of data visceralization 34. We strain toward the far corners of maps. We pivot back and forth between the pages of newspapers. We curl ourselves over small objects like daguerreotypes, postcards, or brochures. These embodied, situated, perspectival experiences are inherent to our interactions with physical objects. By using augmented reality to pin life-size digitized items to physical surfaces, Booksnake enables and encourages this kind of embodied exploration. With Booksnake, you can move around an item to see it from all sides, step back to see it in its totality, or get in close to focus on fine details. Integral to Booksnake is Haraway’s idea of “the particularity and embodiment of all vision” 29. Put another way, Booksnake lets you break out of the god view and see an object as only you can. As an image viewer with a spatial interface, Booksnake is an argument for a way of seeing that prioritizes embodied interaction with digitized archival materials at real-world size. In this, Booksnake is more than a technical critique of existing flat-screen image viewers. It is also an intellectual critique of how these image viewers foreclose certain types of knowledge creation and interpretation. Booksnake thus offers a new way of looking at digitized materials.

This is an interpretive choice in our design of Booksnake. Again, image viewers do not simply _represent_ digital image files to a user; they interpret them. Booksnake relies on the same digital image files as do flat-screen image viewers, and these files are just as mediated in Booksnake as they are when viewed in a flat-screen viewer. (“There is no unmediated photograph,” Haraway writes 29.) Indeed, Booksnake even displays these image files on the flat screen of a smartphone or tablet — but its use of AR creates the illusion that the object is physically present. Where existing flat-screen image viewers foreground the digital-ness of digitized objects, Booksnake instead recovers and foregrounds their object-ness, their materiality. Drucker writes that “information spaces drawn from a point of view, rather than as if they were observer independent, reinsert the subjective standpoint of their creation” 35. Drucker was writing about data visualization, but her point holds for image viewers (which, after all, represent a kind of data visualization). Our design decisions aim to create an interpretive space grounded in individual perspective, with the goal of helping a Booksnake user get closer to the “subjective standpoint” of an archival item’s original creators and users. Even as the technology underpinning Booksnake is radically new, it enables methods of embodied looking that are very old, closely resembling, in form and substance, physical, pre-digital ways of looking.

INITIAL TESTING RESULTS AND USE CASES

Booksnake’s emphasis on embodied interaction gives it particular potential as a tool for humanities education. Embodied interaction is a means of accessing situated knowledges 29 and is key to apprehending cultural heritage materials in their full complexity. As museum scholars and educators have demonstrated, this is true both for physical objects 36 and for virtual replicas 37. Meanwhile, systematic reviews show AR can support student learning gains, motivation, and knowledge transfer 38. By using AR to make embodied interaction possible with digitized items, Booksnake supports student learning through movement, perspective, and scale. For example, a student could use Booksnake to physically follow an explorer’s track across a map, watch a painting’s details emerge as she moves closer, or investigate the relationship between a poster’s size and its message — interactions impossible with a flat-screen viewer. Here, we briefly highlight themes that have emerged in user and classroom testing, then discuss potential use cases.

A common theme in classroom testing was that Booksnake’s presentation of life-size virtual objects made students feel like they were closer to digitized sources. A colleague’s students used Booksnake as part of an in-class exercise to explore colonial-era Mesoamerican maps and codices, searching for examples of cultural syncretism. In a post-activity survey, students repeatedly described a feeling of presence. Booksnake gave one student “the feeling of actually seeing the real thing up close.” Another student wrote that “I felt that I was actually working with the codex.” A third wrote that “it was cool to see the resource, something I will probably never get to flip through, and get to flip through and examine it.” Students also commented on how Booksnake represented these items’ size. One student wrote that Booksnake “gave me the opportunity to get a better idea of the scale of the pieces.” Another wrote that “I liked being able to see the material physically and see the scale of the drawings on the page to see what they emphasized and how they took up space.” These comments suggest that Booksnake has the most potential to support embodied learning activities that ask students to engage with an item’s physical features (such as an object’s size or proportions), or with the relationship between these features and the item’s textual and visual content.

During one-on-one user testing sessions, two history Ph.D. students each separately described feeling closer to digitized sources. One tester described Booksnake as opening “a middle ground” between digital and physical documents by offering the “flexibility” of digital materials, but the “sensorial experience of closeness” with archival documents. The other tester said that Booksnake brought “emotional value” to archival materials. “Being able to stand up and lean over it [the object] brought it to life a little more,” this tester said. “You can’t assign research utility to that, but it was more immersive and interactive, and in a way satisfying.” Their comments suggest that Booksnake can enrich engagement with digitized materials, especially by producing the feeling of physical presence.

As a virtualization technology, Booksnake makes it possible to present archival materials in new contexts, beyond the physical site of the archive itself. Jeremy Bailenson argues that virtualization technologies are especially effective for scenarios that would otherwise be rare, impractical, destructive, or expensive 39. Here, we use these four characteristics to describe potential Booksnake use cases. First, it is rare to have an individual, embodied interaction with one-of-a-kind materials, especially for people who do not work at GLAM institutions. A key theme in student comments from the classroom testing described above, for example, was that Booksnake gave students a feeling of direct engagement with unique Mesoamerican codices. Second, it is often logistically impractical for a class to travel to an archive (especially for larger classes) or to bring archival materials into classrooms outside of library or museum buildings. The class described above, for example, had around eighty students, and Booksnake made it possible for each student to individually interact with these codices, during scheduled class time and in their existing classrooms. Third, the physical fragility and the rarity or uniqueness of many archival materials typically limit who can handle them or where they can be examined. Booksnake makes it possible to engage with virtual archival materials in ways that would cause damage to physical originals. For example, third-grade students could use Booksnake to explore a historic painting by walking atop a virtual copy placed on their classroom floor, or architectural historians could use Booksnake to bring virtual archival blueprints into a physical site. Finally, it is expensive (in both money and time) to physically bring together archival materials that are held by two different institutions. With Booksnake, a researcher could juxtapose digitized items held by one institution with physical items held by another, for purposes of comparison (such as comparing a preparatory sketch to a finished painting) or reconstruction (such as reuniting manuscript pages that had been separated). In each of these scenarios, Booksnake’s ability to produce virtual replicas of physical objects lowers barriers to embodied engagement with archival materials and opens new possibilities for research, teaching, and learning.

IMMERSIVE TECHNOLOGIES FOR CULTURAL HERITAGE

Booksnake is an empty frame. It leverages AR to extend the exploratory freedom that users associate with browsing online collections into physical space. In doing so, Booksnake joins a small collection of digital tools and projects using immersive technologies as the basis for interacting with cultural heritage materials and collections.

A dedicated and technically adept user could use 3D modeling and authoring software (such as Blender, Unity, Maya, or Reality Composer) to manually transform digital images into virtual objects. This can be done with any digital image, but it is time-intensive and breaks the links between images and their metadata. Booksnake automates this process, making it accessible to far more users, and preserves those metadata links, on which humanistic inquiry depends.

The Google Arts & Culture app offers the most comparable use of humanistic AR. Like Booksnake, Arts & Culture is a mobile app for smartphones and tablets that offers AR as an interface for digitized materials. A user can activate the app’s “Art Projector” feature to “display life-size artworks, wherever you are” by placing a digitized artwork in their physical surroundings 40. But there are three key differences between Google’s app and Booksnake. First, AR is one of many possible interaction methods in Google’s app, which is crowded with stories, exhibits, videos, games, and interactive experiences. In contrast, Booksnake emphasizes AR as its primary interface, foregrounding the embodied experience. Second, Google’s app focuses on visual art (such as paintings and photographs), while Booksnake can display a broader range of archival and cultural heritage materials, making it more useful for humanities scholars. (Booksnake can also display paginated materials, as we discuss further below, while Google’s app cannot.) Finally — and most importantly — Google’s app relies on a centralized database model. Google requires participating institutions to upload their collection images and metadata to Google’s own servers, so that Google can format and serve these materials to users 41. In contrast, Booksnake’s use of IIIF enables institutions to retain control over their digitized collections and expands the capabilities of an open humanities software ecosystem.

Another set of projects approaches immersive technologies as tools for designing and delivering exhibition content. Some use immersive technologies to enhance physical exhibits, such as Veholder, a project exploring technologies and methods for juxtaposing 3D virtual and physical objects in museum settings 42, 43. Others use immersive technologies to create entirely virtual spaces. Before Mozilla discontinued it, many GLAM institutions and artists adapted Mozilla Hubs, a general-purpose tool for building 3D virtual spaces that could be accessed using a flat-screen Web browser or a VR headset, to build virtual exhibition spaces, although users were required to manually import digitized materials and construct virtual replicas 44. Another project, Diomira Galleries, is a prototype tool for building VR exhibition spaces with IIIF-compliant resources 45. Like Booksnake, Diomira uses IIIF to import digital images of archival materials, but Diomira arbitrarily scales these images onto template canvases that do not correspond to an item’s physical dimensions. As with Booksnake, these projects demonstrate the potential of immersive technologies for new research interactions and collection activation with digitized archival materials.

Finally, we are building Booksnake as an AR application for existing consumer mobile devices as a way of lowering barriers to immersive engagement with cultural heritage materials. Most VR projects require expensive special-purpose headsets, which have limited adoption and access 46. In contrast, AR-capable smartphones are ubiquitous, enabling a mobile app to tap the potential of a large existing user base, and positioning such an app to potentially mitigate racial and socioeconomic digital divides in the United States. More Americans own smartphones than own laptop or desktop computers 47. And while Black and Hispanic adults in the United States are less likely to own a laptop or desktop computer than white adults, Pew researchers have found “no statistically significant racial and ethnic differences when it comes to smartphone or tablet ownership” 48. Similarly, Americans with lower household incomes are more likely to rely on smartphones for Internet access 49. Smartphones are thus a key digital platform for engaging and including the largest and most diverse audience. Developing an Android version of Booksnake will enable us to more fully deliver on this potential.

AUTOMATICALLY TRANSFORMING DIGITAL IMAGES INTO VIRTUAL OBJECTS

Booksnake’s central technical innovation is automatically transforming existing digital images of archival cultural heritage materials into dimensionally accurate virtual objects. To make this possible, Booksnake connects existing software frameworks in a new way.

First, Booksnake uses IIIF to download images and metadata.50 IIIF was proposed in 2011 and developed over the early 2010s. Today, the IIIF Consortium is composed of sixty-five global GLAM institutions, from the British Library to Yale University 51, while dozens more institutions offer access to their collections through IIIF because several common digital asset management (DAM) platforms, including CONTENTdm, LUNA, and Orange DAM, support IIIF 52, 53, 54. This widespread use of IIIF means that Booksnake is readily compatible with many existing digitized collections. By using IIIF, Booksnake embraces and extends the capabilities of a robust humanities software ecosystem. By demonstrating a novel method to transform existing IIIF-compliant resources for interaction in augmented reality, we hope that Booksnake will drive wider IIIF adoption and standardization.
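Part of what makes IIIF easy to build on is that the IIIF Image API addresses images through a predictable URL pattern, {scheme}://{server}{/prefix}/{identifier}/{region}/{size}/{rotation}/{quality}.{format}, alongside an info.json document that describes the image (including its pixel width and height). The Python sketch below assembles such URLs from an image service's base URI. It is an illustration of the protocol, not Booksnake's code: the base URI is hypothetical, and the default size value "max" follows version 3.0 of the Image API (some older servers expect "full" instead).

```python
# Sketch of IIIF Image API URL construction. The base URI used below is hypothetical;
# in practice it comes from the image service listed in an item's IIIF manifest.


def iiif_image_url(service_base: str, region: str = "full", size: str = "max",
                   rotation: str = "0", quality: str = "default",
                   fmt: str = "jpg") -> str:
    """Build {base}/{region}/{size}/{rotation}/{quality}.{format}."""
    return f"{service_base.rstrip('/')}/{region}/{size}/{rotation}/{quality}.{fmt}"


def iiif_info_url(service_base: str) -> str:
    """URL of the info.json document describing the image (dimensions, tiles, etc.)."""
    return f"{service_base.rstrip('/')}/info.json"


if __name__ == "__main__":
    base = "https://iiif.example.org/images/item-0001"  # hypothetical image service
    print(iiif_info_url(base))
    print(iiif_image_url(base))                          # whole image, largest size offered
    print(iiif_image_url(base, size="1024,"))            # whole image, scaled to 1024 px wide
    print(iiif_image_url(base, region="0,0,2000,1500"))  # a rectangular detail, in pixels
```

Because every compliant server answers the same URL grammar, a client written once can request a whole page, a scaled-down preview, or a detail region from any participating institution without institution-specific image code.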

Next, Booksnake uses a pair of Apple software frameworks, ARKit and RealityKit. ARKit, introduced in 2017, interprets and synthesizes data from an iPhone or iPad’s cameras and sensors to understand a user’s physical surroundings and to anchor virtual objects to horizontal and vertical surfaces 55. RealityKit, introduced in 2019, is a framework for rendering and displaying virtual objects, as well as managing a user’s interactions with them (for example, by interpreting a user’s on-screen touch gestures) 56. Both ARKit and RealityKit are built into the device operating system, enabling us to rely on these frameworks to create virtual objects and to initiate and manage AR sessions.

Developing Booksnake as a native mobile app, rather than a Web-based tool, makes it possible for Booksnake to take advantage of the powerful camera and sensor technologies in mobile devices. We are developing Booksnake’s first version for iPhone and iPad because Apple’s tight integration of hardware and software supports rapid AR development and ensures consistency in user experience across devices. We next plan to develop an Android version of Booksnake to broaden access. An alternative development approach, WebXR, a cross-platform Web-based AR/VR framework currently in development, lacks the features needed to support our project goals.

Booksnake thus links IIIF with RealityKit and ARKit to produce a novel result: an on-demand pipeline for automatically transforming existing digital images of archival materials into custom virtual objects that replicate the physical original’s real-world proportions, dimensions, and appearance, as well as an AR interface for interacting with these virtual objects in physical space. (See figure 3.) How does Booksnake do this?

Booksnake starts with the most humble of Internet components: the URL. A Booksnake user first searches and browses an institution’s online catalog through an in-app Web view. Booksnake offers an Add button on catalog pages for individual items. When the user taps this button to add an item to their Booksnake library, Booksnake retrieves the item page’s URL. Because of Apple’s privacy restrictions and application sandboxing, this is the only information that Booksnake can read from a given Web page; it cannot directly access content on the page itself. Instead, Booksnake translates the item page URL into the corresponding IIIF manifest URL.

An IIIF manifest is a JSON file — a highly structured, computer-readable text file — that contains a version of the item’s catalog record, including metadata and URLs for associated images. The exact URL translation process varies depending on how an institution has implemented IIIF, but in many cases it is as simple as appending "/manifest.json" to the item URL. For example, the item URL for the Library of Congress’s 1858 “Chart of the submarine Atlantic Telegraph” is https://www.loc.gov/item/2013593216/, and the item’s IIIF manifest URL is https://www.loc.gov/item/2013593216/manifest.json. In other cases, Booksnake may extract a unique item identifier from the item URL, then use that unique identifier to construct the appropriate IIIF manifest URL. Booksnake then downloads and parses the item’s IIIF manifest.
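The URL translation step can be pictured as a small per-institution lookup. The following is a minimal Python sketch of that idea, not Booksnake's own implementation: the Library of Congress rule follows the `/manifest.json` pattern described above, while the `collections.example.org` host, its URL shapes, and all function names are hypothetical placeholders for an institution that requires identifier extraction.

```python
import re
from typing import Callable, Dict
from urllib.parse import urlparse


def loc_manifest_url(item_url: str) -> str:
    """Library of Congress: append manifest.json to the item page URL."""
    parsed = urlparse(item_url)
    if not parsed.path.startswith("/item/"):
        raise ValueError(f"not a LOC item URL: {item_url}")
    # Normalize to a single trailing slash (any query string is dropped).
    base = f"{parsed.scheme}://{parsed.netloc}{parsed.path.rstrip('/')}/"
    return base + "manifest.json"


# HYPOTHETICAL institution whose item pages and manifests share a numeric ID.
EXAMPLE_ITEM = re.compile(r"^https://collections\.example\.org/objects/(\d+)/?$")


def example_manifest_url(item_url: str) -> str:
    """Hypothetical rule: extract the item ID, then build the manifest URL."""
    match = EXAMPLE_ITEM.match(item_url)
    if match is None:
        raise ValueError(f"unrecognized item URL: {item_url}")
    return f"https://iiif.example.org/manifests/{match.group(1)}"


# One translation rule per catalog host.
RULES: Dict[str, Callable[[str], str]] = {
    "www.loc.gov": loc_manifest_url,
    "collections.example.org": example_manifest_url,
}


def manifest_url_for(item_url: str) -> str:
    """Dispatch an item page URL to its institution's translation rule."""
    host = urlparse(item_url).netloc
    if host not in RULES:
        raise ValueError(f"no IIIF rule for host {host!r}")
    return RULES[host](item_url)
```

Keeping one rule per host means that supporting a new institution amounts to registering one more function.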

First, Booksnake extracts item metadata from the IIIF manifest. Booksnake uses this metadata to construct an item page in the app’s Library tab, enabling a user to view much of the same item-level metadata visible in the host institution’s online catalog. An IIIF manifest presents metadata in key-value pairs, with each pair containing a general label (or key) and a corresponding entry (or value). For example, the IIIF manifest for the 1858 Atlantic telegraph map mentioned above contains the key Contributors, representing the catalog-level field listing an item’s authors or creators, and the corresponding item-level value “Barker, Wm. J. (William J.) (Surveyor),” identifying the creator of this specific item. Importantly, while the key-value pair structure is generally consistent across IIIF manifests from different institutions, the key names themselves are not. The “Contributors” key at one institution may be named Creators at another institution, and Authors at a third. The current version of Booksnake simply displays the key-value metadata as provided in the IIIF manifest. As Booksnake adds support for additional institutions, we plan to identify and link different keys representing the same metadata categories (such as Contributors, Creators, and Authors). This will enable users, for example, to sort items from different institutions by common categories like Author or Date created, or to search within a common category.
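A minimal Python sketch of both steps follows: reading a manifest's `metadata` array of label/value pairs, and the planned mapping of institution-specific keys onto shared categories. It assumes labels and values are either plain strings (common in IIIF Presentation 2.x) or language maps such as `{"en": ["..."]}` (IIIF Presentation 3.0); the `CANONICAL_KEYS` table and the category names in it are hypothetical.

```python
from typing import Any, Dict, List, Tuple


def _text(value: Any) -> str:
    """Flatten a IIIF label or value into display text."""
    if isinstance(value, str):
        return value
    if isinstance(value, list):  # several strings or language-tagged entries
        return "; ".join(_text(v) for v in value)
    if isinstance(value, dict):
        if "@value" in value:  # Presentation 2.x language-tagged value
            return str(value["@value"])
        # Presentation 3.0 language map: {"en": ["..."], "none": ["..."]}
        return "; ".join(_text(v) for v in value.values())
    return str(value)


def extract_metadata(manifest: Dict[str, Any]) -> List[Tuple[str, str]]:
    """Return the manifest's key-value pairs, as provided."""
    return [(_text(e.get("label", "")), _text(e.get("value", "")))
            for e in manifest.get("metadata", [])]


# HYPOTHETICAL cross-institution mapping of key names to shared categories.
CANONICAL_KEYS = {
    "contributors": "Creator",
    "creators": "Creator",
    "authors": "Creator",
    "date": "Date created",
}


def normalize(pairs: List[Tuple[str, str]]) -> Dict[str, List[str]]:
    """Group values under shared categories; unknown keys keep their own label."""
    grouped: Dict[str, List[str]] = {}
    for label, value in pairs:
        key = CANONICAL_KEYS.get(label.strip().lower(), label)
        grouped.setdefault(key, []).append(value)
    return grouped
```

Sorting or searching by a common category then reduces to reading one key of the normalized dictionary.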

Second, Booksnake uses image URLs contained in the IIIF manifest to download the digital images (typically JPEG or JPEG 2000 files) associated with an item’s catalog record. Helpfully, IIIF image URLs are structured so that certain requests — like the image’s size, its rotation, even whether it should be displayed in color or black-and-white — can be encoded in the URL itself. Booksnake leverages this affordance to request images that are sufficiently detailed for virtual object creation, which sometimes means requesting images larger than what the institution serves by default. For example, the Library of Congress typically serves images at 25% of their original size, but Booksnake modifies the IIIF image URL to request images at 50% of original size. Our testing indicates that this level of resolution produces sufficiently detailed virtual objects, without visible pixelation, for Library of Congress collections.57 We anticipate customizing this value for other institutions. Having downloaded the item’s metadata and digital images, Booksnake can now create a virtual object replicating the physical original.
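Because the IIIF Image API fixes the order of the path segments (`{identifier}/{region}/{size}/{rotation}/{quality}.{format}`), requesting a different size only requires swapping one segment, for example `pct:25` for `pct:50`. A minimal Python sketch follows; the example URL and function name are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit


def with_iiif_size(image_url: str, size: str) -> str:
    """Return image_url with its IIIF size segment replaced (e.g. "pct:50")."""
    parts = urlsplit(image_url)
    # IIIF Image API path: .../{identifier}/{region}/{size}/{rotation}/{quality}.{format}
    segments = parts.path.split("/")
    if len(segments) < 6 or "." not in segments[-1]:
        raise ValueError(f"not a IIIF image request URL: {image_url}")
    segments[-3] = size  # size is third from the end
    return urlunsplit(parts._replace(path="/".join(segments)))


# Usage (hypothetical URL):
# with_iiif_size("https://iiif.example.org/iiif/item-123/full/pct:25/0/default.jpg", "pct:50")
```

Counting from the end of the path keeps the function independent of any server prefix, and identifiers containing percent-encoded slashes (`%2F`) are not split.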

Our initial goal was for Booksnake to display any image resource available through IIIF, but we quickly discovered that differences in how institutions record dimensional metadata meant that we would have to adapt Booksnake to different digitized collections. To produce size-accurate virtual objects, Booksnake requires either an item’s physical dimensions in a computer-readable format, or _both_ the item’s pixel dimensions and its digitization resolution, from which it can calculate the item’s physical dimensions (see figures 4a and 4b). Institutions generally take one of three approaches to providing dimensional metadata.
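The second route is a single division: pixels divided by pixels per inch gives inches. The minimal Python sketch below also converts the result to meters, assuming, as in RealityKit, that the AR scene measures lengths in meters; the function name is illustrative.

```python
from typing import Tuple

METERS_PER_INCH = 0.0254


def physical_size_from_pixels(pixel_width: int, pixel_height: int,
                              ppi: float) -> Tuple[float, float]:
    """Return (width, height) in meters from pixel dimensions and resolution."""
    if ppi <= 0:
        raise ValueError("digitization resolution must be positive")
    # pixels / (pixels per inch) = inches; inches * 0.0254 = meters
    return (pixel_width / ppi * METERS_PER_INCH,
            pixel_height / ppi * METERS_PER_INCH)
```

For example, a 6000 × 4800 pixel image scanned at 600 ppi corresponds to a 10 × 8 inch original.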

The simplest approach is to list an item’s physical dimensions as metadata in its IIIF manifest. Some archives, such as the David Rumsey Map Collection, provide separate fields for an item’s height and width, each labeled with units of measure. This formatting provides the item’s dimensions in a computer-readable format, making it straightforward to create a virtual object of the appropriate size. Alternatively, an institution may use the IIIF Physical Dimension service, an optional service that provides the scale relationship between an item’s physical and pixel dimensions, along with the physical units of measure 58. But we are unaware of any institution that has implemented this service for its collections.59

A more common approach is to provide an item’s physical dimensions in a format that is not immediately computer-readable. The Huntington Digital Library, for example, typically lists an item’s dimensions as part of a text string in the physical description field. One c. 1921 steamship poster is described as: “Print ; image 60.4 x 55 cm (23 3/4 x 21 5/8 in.) ; overall 93.2 x 61 cm (36 11/16 x 24 in.)” [spacing sic]. To interpret this text string and convert it into numerical dimensions, a computer program like Booksnake requires additional guidance. Which set of dimensions should it use, image or overall? Which units of measure, centimeters or inches? And what if the string includes additional descriptors, such as folded or unframed? We are currently collaborating with the Huntington to develop methods to parse dimensional metadata from textual descriptions, with extensibility to other institutions and collections.
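To make these questions concrete, here is a minimal Python sketch of one possible parsing policy, not the method under development with the Huntington. It assumes that segments are separated by semicolons, that the word before the numbers labels the measurement, that metric values are preferred, that an "overall" measurement wins over an "image" one, and that dimensions are given height first. Descriptors like "folded" are not handled.

```python
import re
from typing import Dict, Optional, Sequence, Tuple

# First metric "H x W unit" pair in a segment, e.g. "93.2 x 61 cm".
DIMS = re.compile(r"(\d+(?:\.\d+)?)\s*x\s*(\d+(?:\.\d+)?)\s*(cm|mm)\b")
UNIT_TO_M = {"cm": 0.01, "mm": 0.001}


def parse_dimensions(description: str,
                     prefer: Sequence[str] = ("overall", "image")
                     ) -> Optional[Tuple[float, float]]:
    """Return (width, height) in meters, or None if no metric pair is found."""
    labeled: Dict[str, Tuple[float, float]] = {}
    for segment in (s.strip() for s in description.split(";")):
        match = DIMS.search(segment)
        if match is None:
            continue  # e.g. the bare "Print" segment
        label = segment[:match.start()].strip().lower()
        height, width = float(match.group(1)), float(match.group(2))
        scale = UNIT_TO_M[match.group(3)]
        labeled[label] = (width * scale, height * scale)
    for label in prefer:
        if label in labeled:
            return labeled[label]
    # Fall back to the first measurement found, if any.
    return next(iter(labeled.values()), None)
```

Applied to the poster description above, this policy selects the overall measurement, 61 cm wide by 93.2 cm tall.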

Finally, an institution may not provide any dimensional metadata in its IIIF manifests. This is the case with the Library of Congress (LOC), which lists physical dimensions, where available, in an item’s catalog record, but does not provide this information in the item’s IIIF manifest.60 This presented us with a problem: How to create dimensionally-accurate virtual objects without the item’s physical dimensions? After much research and troubleshooting, we hit upon a solution. We initially dismissed pixel dimensions as a source of dimensional metadata because there is no consistent relationship between physical and pixel dimensions. And yet, during early-stage testing, Booksnake consistently created life-size virtual maps from LOC, even as items in other LOC collections resulted in virtual objects with wildly incorrect sizing. This meant there was a relationship, at least for one collection — we just had to find it.

LOC digitizes items to FADGI standards, which specify a digitization resolution for different item types. For example, FADGI standards specify a target resolution of 600 ppi for prints and photographs, and 400 ppi for books.61 We also discovered that LOC scales down item images in some collections. (For example, map images are scaled down by a factor of four.) We combined the digitization resolution and scaling factor for each item type into a pre-coded reference table. When Booksnake imports an LOC item, it consults the item’s IIIF manifest to determine the item type, then consults the reference table to determine the appropriate factor for converting the item’s pixel dimensions to physical dimensions. This solution is similar to a client-side version of the IIIF Physical Dimension service, customized to LOC’s digital collections.
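The reference-table approach can be sketched in Python as a lookup followed by a division. Only the 600 ppi and 400 ppi targets and the map scaling factor of four come from the text above. The item-type keys, the map resolution, and the scaling factors of 1 are hypothetical placeholders, and determining the item type from the manifest is left out.

```python
from typing import Dict, Tuple

# item type -> (digitization resolution in ppi, linear downscaling factor)
# 600/400 ppi are the FADGI targets cited above; the map factor of 4 is from the text.
# HYPOTHETICAL: the type keys, the map ppi (400.0), and the factors of 1.0.
LOC_REFERENCE: Dict[str, Tuple[float, float]] = {
    "prints-photographs": (600.0, 1.0),
    "books": (400.0, 1.0),
    "maps": (400.0, 4.0),
}


def loc_physical_size_in(item_type: str, pixel_width: int,
                         pixel_height: int) -> Tuple[float, float]:
    """Convert served pixel dimensions to (width, height) in inches."""
    ppi, downscale = LOC_REFERENCE[item_type]
    # Downscaling by N means each served pixel spans N original pixels per axis,
    # so the served image's effective resolution is ppi / N.
    effective_ppi = ppi / downscale
    return pixel_width / effective_ppi, pixel_height / effective_ppi
```

Folding the scaling factor into an effective resolution lets every item type share the same conversion.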

As this discussion suggests, determining the physical dimensions of a digitized item is a seemingly simple problem that can quickly become complicated. Developing robust methods for parsing different types of dimensional metadata is a key research area because these methods will allow us to expand the range of institutions and materials with which Booksnake is compatible. While IIIF makes it straightforward to access digitized materials held by different institutions, the differences in how each institution presents dimensional metadata mean that, for now, we must adapt Booksnake to each institution’s metadata schema.62

Booksnake then uses this information to create virtual objects on demand. When a user taps View in Your Space to initiate an AR session, Booksnake uses RealityKit to transform the item’s digital image into a custom virtual object suitable for interaction in physical space. First, Booksnake creates a blank two-dimensional virtual plane sized to match the item’s physical dimensions. Next, Booksnake applies the downloaded image to this virtual plane as a texture, scaling the image to match the size of the plane. This results in a custom virtual object that matches the original item’s physical dimensions, proportions, and appearance. This process is invisible to the user: Booksnake just works. The process is straightforward for flat digitized items like maps or posters, which are typically digitized from a single perspective, showing only their recto (front) side.

Booksnake’s virtual object creation process is more complex for compound objects, which have multiple images linked to a single item record. Compound objects can include books, issues of periodicals or newspapers, diaries, scrapbooks, photo albums, and postcards. The simplest compound objects, such as postcards, have two images showing the object’s recto (front) and verso (back) sides. Nineteenth-century newspapers may have four or eight pages, while books may run into hundreds of pages, with each page typically captured and stored as a single image.

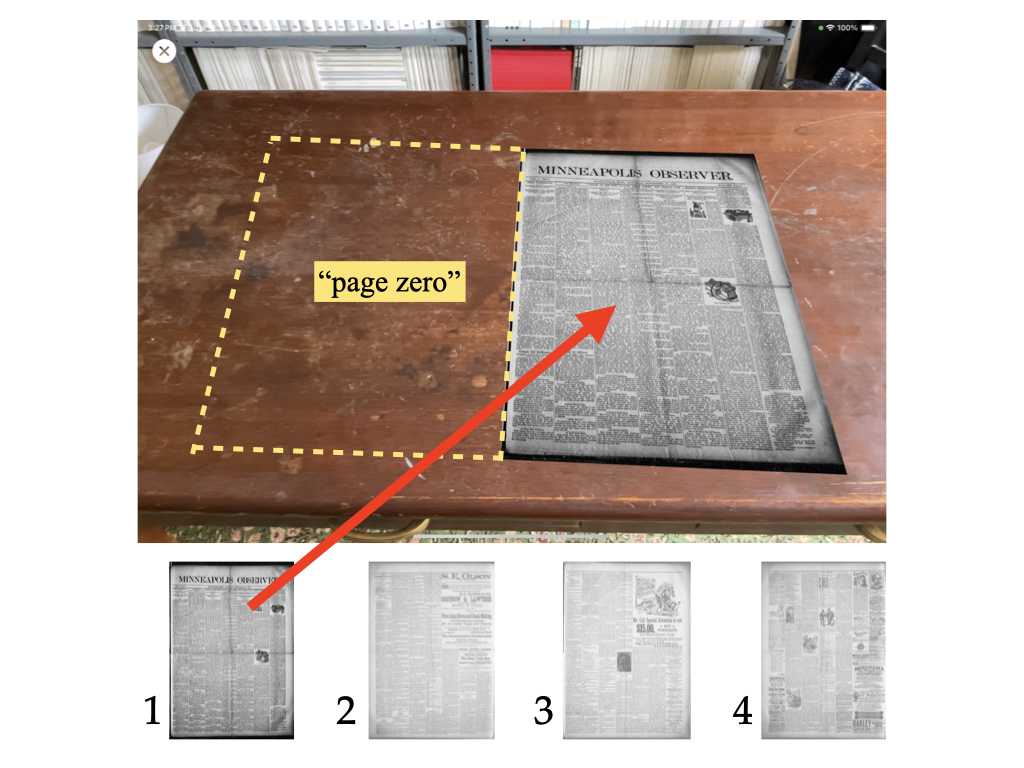

This and the following figures illustrate how Booksnake constructs virtual compound objects from multiple individual virtual objects. In each figure, the top image shows an annotated screenshot of the live camera view, and the bottom images show what images are stored in device memory. First, Booksnake converts the item’s first image into a virtual object (indicated with the red arrow), and creates a matching blank (untextured) virtual object, which acts as an invisible “page zero” (indicated with the yellow dashed line). Booksnake places the invisible “page zero” object to the left of the page one object, and links the two objects, as if along a central spine.

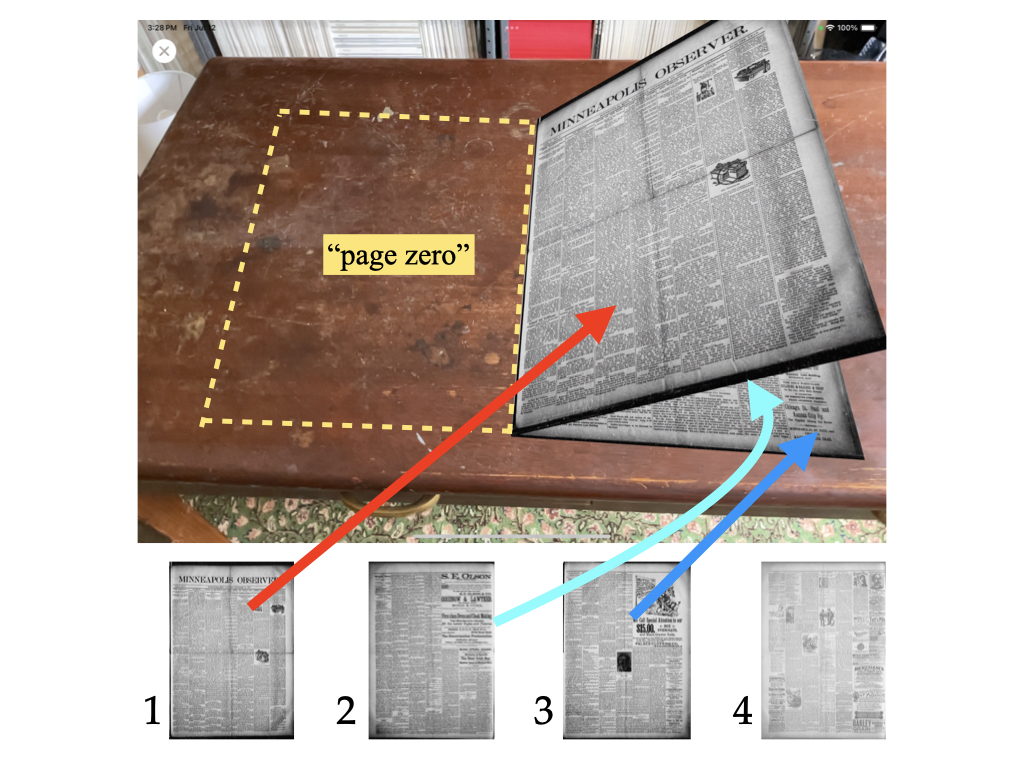

When a user turns the page of a virtual compound object, Booksnake loads the next two item images and converts them into individual virtual objects. Booksnake aligns the page two object (indicated with the light blue arrow) on the reverse side of the page one object (indicated with the red arrow) and positions the page three object (indicated with the dark blue arrow) directly beneath the page one object. Booksnake then animates a page turn by rotating pages one and two together through 180 degrees.

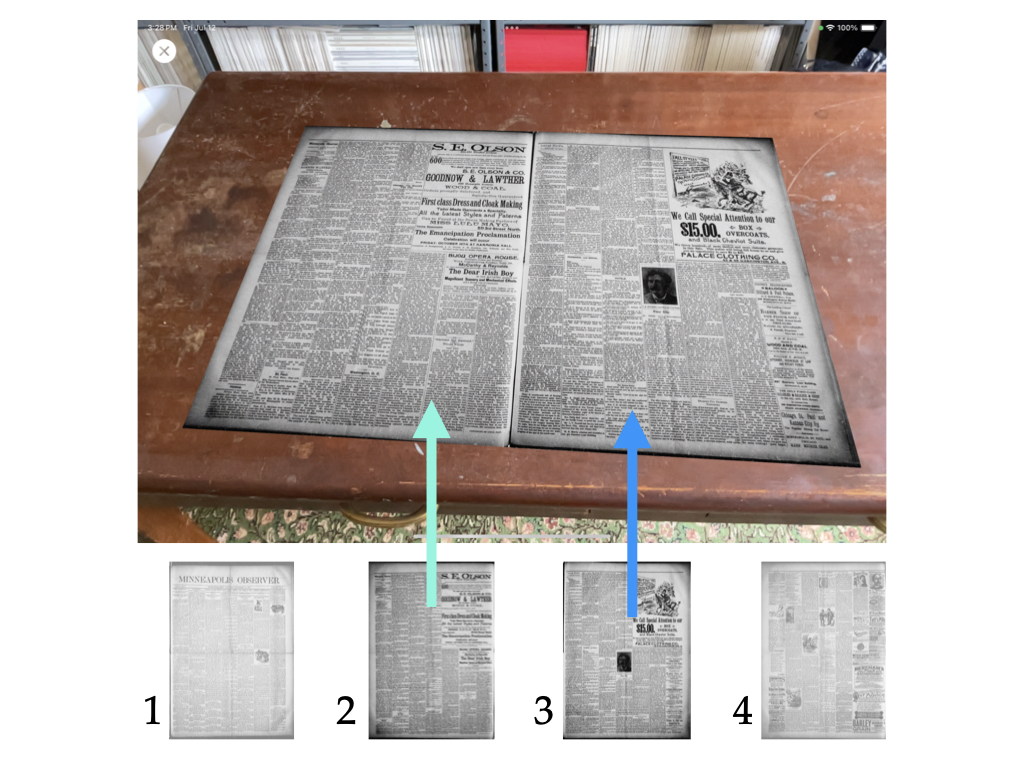

When the animation is complete, Booksnake has replaced the virtual objects representing pages zero and one with the new virtual objects representing pages two and three (indicated with the light blue and dark blue arrows, respectively), and has discarded the page zero and page one objects. This on-demand virtual object creation process optimizes for memory and performance.

Booksnake handles compound objects by creating multiple individual virtual objects, one for each item image, then arranging and animating these objects to support the illusion of a cohesive compound object. Our initial implementation is modeled on a generic paginated codex, with multiple pages around a central spine. As with flat objects, this creation process happens on demand, when a user starts an AR session. Booksnake uses a compound object’s first image as the virtual object’s cover or first page (more on this below). Booksnake creates a virtual object for this first image, then creates a matching invisible virtual object, which acts as an invisible page zero (see figure 5). The user sees the first page of a newspaper, for example, sitting and waiting to be opened. When the user swipes from right to left across the object edge to “turn” the virtual page, Booksnake retrieves the next two images, transforms them into virtual objects representing pages two and three, then animates a page turn with the object’s spine serving as the rotation axis (see figure 6). Once the animation is complete, pages two and three have replaced the invisible page zero and page one, and Booksnake discards those virtual objects (see figure 7). This on-demand process means that Booksnake only needs to load a maximum of four images into AR space, optimizing for memory and performance. By using a swipe gesture to turn pages, Booksnake leverages a navigation affordance with which users are already familiar from interactions with physical paginated items, supporting immersion and engagement. The page-turn animation, paired with a page-turn sound effect, further enhances the realism of the virtual experience.
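The bookkeeping behind this process can be sketched independently of RealityKit. The minimal Python class below (a hypothetical name, handling forward page turns only) tracks which page images are loaded. Page 0 is the invisible placeholder, and a turn briefly holds both the outgoing and incoming spreads, so at most four images are loaded at once.

```python
from typing import Set


class PageWindow:
    """Tracks which page images are loaded while turning forward through a codex."""

    def __init__(self, page_count: int) -> None:
        if page_count < 1:
            raise ValueError("a compound object needs at least one page")
        self.page_count = page_count
        self.left, self.right = 0, 1      # page 0 is the invisible placeholder
        self.loaded: Set[int] = {0, 1}
        self.peak = len(self.loaded)      # most images held at any moment

    def can_turn(self) -> bool:
        return self.right + 1 <= self.page_count

    def turn_forward(self) -> bool:
        if not self.can_turn():
            return False
        back = self.right + 1             # sits on the reverse of the current right page
        underneath = self.right + 2       # revealed beneath it (may not exist)
        incoming = {p for p in (back, underneath) if p <= self.page_count}
        self.loaded |= incoming           # both spreads exist during the animation
        self.peak = max(self.peak, len(self.loaded))
        self.loaded -= {self.left, self.right}  # discarded once the turn completes
        self.left, self.right = back, underneath
        return True
```

Because memory use depends only on the spread size, a book of several hundred pages costs no more than a four-page newspaper.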

A key limitation in our initial approach to creating virtual paginated objects is that our generic codex model is based on one particular type of object, the newspaper. Specifically, we used LOC’s Chronicling America collection of historical newspapers as a testbed to develop the pipeline for creating virtual paginated objects, as well as the user interface and methods for interacting with virtual paginated objects in physical space. While the newspaper is broadly representative of the codex form’s physical features and affordances, making it readily extensible to other paginated media, there are important differences in how different media are digitized and presented in online collections. For example, while Chronicling America newspapers have one page per image, some digitized books held by LOC have two pages per image. We have adapted Booksnake’s object creation pipeline to identify double-page images, split the image in half, and wrap each resulting image onto individual facing pages.63 There are also important cultural differences in codex interaction methods: We plan to further extend the capabilities of this model by building support for IIIF’s “right-to-left” reading direction flag, which will enable Booksnake to correctly display paginated materials in languages like Arabic, Chinese, Hebrew, and Japanese.
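Splitting a double-page image comes down to computing two rectangles. The minimal Python sketch below expresses them as IIIF `x,y,w,h` region strings, so each half could be requested from the server directly. This is a swapped-in alternative to cropping on the device. The width-to-height threshold used to detect spreads is a hypothetical heuristic, and the optional reading-direction argument anticipates the `right-to-left` value of IIIF's `viewingDirection` property.

```python
from typing import List


def is_double_page(width: int, height: int, threshold: float = 1.2) -> bool:
    """HYPOTHETICAL heuristic: single pages are rarely much wider than tall."""
    if height <= 0:
        raise ValueError("height must be positive")
    return width / height >= threshold


def split_regions(width: int, height: int,
                  viewing_direction: str = "left-to-right") -> List[str]:
    """IIIF regions ("x,y,w,h") for the two halves, in reading order."""
    half = width // 2
    left = f"0,0,{half},{height}"
    right = f"{half},0,{width - half},{height}"  # absorbs any odd pixel
    if viewing_direction == "right-to-left":
        return [right, left]
    return [left, right]
```

Each returned region would then occupy the region segment of an image request URL, and each half would be wrapped onto its own facing page.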

A further limitation of our initial approach is our assumption that all compound objects represent paginated material in codex form, which is not the case. Booksnake cannot yet realistically display items like scrolls or Mesoamerican screenfold books (which are often digitized by page, but differ in physical construction and interaction methods from Western books). In other cases, a compound object comprises a collection of individual items that are stored together but not physically bound to each other. One example is “Polish Declarations of Admiration and Friendship for the United States”, held by LOC, which consists of 181 unbound sheets. Or an institution may use a compound object to store multiple different images of a single object. For example, the Yale Center for British Art (YCBA) often provides several images for paintings in its collections, as with the 1769 George Stubbs painting “Water Spaniel”. YCBA provides four images: of the framed painting, the unframed painting, the unframed image cropped to show just the canvas, and the framed painting’s verso side. We plan to continue refining Booksnake’s compound object support by using collection- and item-level metadata to differentiate between paginated and non-paginated compound objects, in order to realistically display different types of materials.
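One metadata signal that IIIF already defines is the layout hint on a manifest: `viewingHint` in Presentation 2.x and `behavior` in Presentation 3.0, with values such as `paged`, `continuous`, and `individuals`. The minimal Python sketch below classifies a manifest by these hints. The layout names are hypothetical, and because many manifests omit the hints, the sketch falls back to the current codex behavior; in practice, catalog-level metadata would also be needed.

```python
from typing import Any, Dict


def layout_for(manifest: Dict[str, Any]) -> str:
    """Choose a hypothetical display layout from IIIF viewing hints."""
    hints = manifest.get("behavior") or manifest.get("viewingHint") or []
    if isinstance(hints, str):  # Presentation 2.x often uses a single string
        hints = [hints]
    hint_set = set(hints)
    if "paged" in hint_set:
        return "codex"
    if "continuous" in hint_set:  # e.g. scrolls
        return "scroll"
    if hint_set & {"individuals", "unordered"}:  # e.g. unbound sheets, alternate views
        return "individual-items"
    return "codex"  # fallback: today's generic paginated model
```

An item like the YCBA painting would still need collection-level knowledge, because its four images are alternate views of a single object rather than separate items.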

Having constructed a custom virtual object, Booksnake next opens the live camera view so that the user can interact with the object in physical space. As the user moves their device to scan their surroundings, Booksnake overlays a transparent image of the object, outlined in red, over horizontal and vertical surfaces, giving the user a preview of how the object will fit in their space. Tapping the transparent preview anchors the object, which turns opaque, to a physical surface. As the user and device move, Booksnake uses camera and sensor data to maintain the object’s relative location in physical space, opening the object to embodied exploration. The user can crouch down and peer at the object from the edge of the table, pan across the object’s surface, or zoom in on key details by moving closer to the object. The user can also enlarge or shrink an object by using the familiar pinch-to-zoom gesture, although in this case the user is not zooming in or out, but re-scaling the virtual object itself. The object remains in place even when out of view of the device: A user can walk away from an anchored object, then turn back to look at it from across the room.

Again, an object viewed with Booksnake is just as mediated as one viewed in a traditional Web-based viewer. Throughout the development process, we have repeatedly faced interpretive questions at the intersection of technology and the humanities. One early question, for example: Should users be allowed to re-scale objects? The earliest versions of Booksnake lacked this affordance, to emphasize that a given virtual object was presented at life size. But one of our advisory board members, Philip J. Ethington, argued that digital technology is powerful because it can enable interactions that aren’t possible in the real world. And so we built a way for users to re-scale objects by pinching them, while also displaying a changing percentage indicator to show users how much larger or smaller the virtual object is when compared to the original. In approaching this and similar questions, our goal is for virtual objects to behave in realistic and familiar ways, to closely mimic the experience of embodied interaction with physical materials.

CONCLUSION

“What kind of interface exists after the screen goes away?” asks Johanna Drucker. “I touch the surface of my desk and it opens to the library of the world? My walls are display points, capable of offering the inventory of masterworks from the world’s museums and collections into view?” 64. These questions point toward spatial interfaces, and this is what Booksnake is building toward. Futurists and science-fiction authors have long positioned virtual reality as a means of transporting users away from their day-to-day humdrum reality into persistent interactive three-dimensional virtual worlds, sometimes called the metaverse 65, 66, 67, 68. Booksnake takes a different approach, bringing life-size virtual representations of real objects into a user’s physical surroundings for embodied interaction. The recent emergence of mixed-reality headsets, such as the Apple Vision Pro, only underscores the potential of spatial computing for humanities work, especially for affordances like hands-on interaction with virtual objects.

The first version of Booksnake represents a proof of concept, both of the pipeline to transform digitized items into virtual objects and of AR as an interface for research, teaching, and learning with digitized archival materials. In addition to researching development of an Android version, our next steps are to refine Booksnake’s object creation pipeline and AR interface. One major research focus is improving how Booksnake identifies, ingests, and interprets different types of dimensional metadata, to support as many different metadata schemas and existing online collections as possible. Another research focus is expanding the range of interactions possible with virtual objects — for example, making it possible for users to annotate a virtual object with text or graphics, or to adjust the transparency of a virtual object (to better support comparison between virtual and physical items). In the longer term, once the IIIF Consortium finalizes protocols for 3D archival data and metadata 69, we anticipate that future versions of Booksnake will be able to download and display 3D objects. Ongoing user testing and feedback will further inform Booksnake’s continuing development.

Our technical work enables Booksnake users to bring digitized cultural heritage materials out of flat screens and onto their desks and walls, using a tool — the consumer smartphone or tablet — that they already own. Meanwhile, our conceptual work to construct size-accurate virtual objects from existing images and available metadata will help to make a large, culturally significant, and ever-expanding body of digitized materials available for use in other immersive technology projects where the dimensional accuracy of virtual objects is important, such as virtual museum exhibits or manuscript viewers. To put it another way, it’s taken the better part of three decades to digitize millions of cultural heritage materials — and that’s barely the tip of the iceberg. How much more time will it take to virtualize these cultural heritage materials, that is, to create accurate virtual replicas? Booksnake’s automatic transformation method offers a way to repurpose existing resources toward this goal.

We’re building Booksnake to enable digital accessibility and connect more people with primary sources. At its core, Booksnake is very simple. It is a tool for transforming digital images of cultural heritage materials into life-size virtual objects. In doing so, Booksnake leverages augmented reality as a means of interacting with archival materials that is radically new, but that feels deeply familiar. Booksnake is a way to virtualize the reading room or museum experience — and thereby democratize it, making embodied interaction with cultural heritage materials in physical space more accessible to more people in more places.

Falbo, Bianca. (2000) “Teaching from the Archives”, RBM: A Journal of Rare Books, Manuscripts, and Cultural Heritage, 1(1), pp. 33–35. Available at: https://doi.org/10.5860/rbm.1.1.173.

Schmiesing, A., and Hollis, D. (2002) “The Role of Special Collections Departments in Humanities Undergraduate and Graduate Teaching: A Case Study,” Libraries and the Academy 2(3), pp. 465–480. Available at: https://doi.org/10.1353/pla.2002.0065.

Toner, Carol. (1993) “Teaching Students to be Historians: Suggestions for an Undergraduate Research Seminar,” The History Teacher 27(1), pp. 37–51. Available at: https://doi.org/10.2307/494330.

Rosenzweig, Roy. (2003) “Scarcity or Abundance? Preserving the Past in a Digital Era,” The American Historical Review 108(3), pp. 735–762. Available at: https://doi.org/10.1086/ahr/108.3.735.

Solberg, Janine. (2012) “Googling the Archive: Digital Tools and the Practice of History.” Advances in the History of Rhetoric 15(1), pp. 53–76. Available at: https://doi.org/10.1080/15362426.2012.657052.

Ramsay, Stephen. (2014) “The Hermeneutics of Screwing Around; or What You Do With a Million Books” in Kee, Kevin (ed.) Pastplay: Teaching and Learning History with Technology. Ann Arbor: University of Michigan Press, pp. 111–120. Available at: https://doi.org/10.1353/book.29517.

Putnam, Lara. (2016) “The Transnational and the Text-Searchable: Digitized Sources and the Shadows They Cast,” The American Historical Review, 121(2), pp. 377–402. Available at: https://doi.org/10.1093/ahr/121.2.377.

Azuma, Ronald, et al. (2001) “Recent advances in augmented reality”, IEEE Computer Graphics and Applications, 21(6), pp. 34–47. Available at: https://doi.org/10.1109/38.963459.

Cramer, Tom. (2011) “The International Image Interoperability Framework (IIIF): Laying the Foundation for Common Services, Integrated Resources and a Marketplace of Tools for Scholars Worldwide”, Coalition for Networked Information Fall 2011 Membership Meeting, Arlington, Virginia, December 12–13. Available at: https://www.cni.org/topics/information-access-retrieval/international-image-interoperability-framework.

Cramer, Tom. (2015) “IIIF Consortium Formed” [Online]. International Image Interoperability Framework. Available at: https://iiif.io/news/2015/06/17/iiif-consortium/.

Snydman, S., Sanderson, R., & Cramer, T. (2015) “The International Image Interoperability Framework (IIIF): A community & technology approach for web-based images.” In D. Walls (Ed), Archiving 2015: Final Program and Proceedings (pp. 16–21). Society for Imaging Science and Technology. Available at: https://stacks.stanford.edu/file/druid:df650pk4327/2015ARCHIVING_IIIF.pdf

Chandler, Tom, et al. (2017) “A New Model of Angkor Wat: Simulated Reconstruction as a Methodology for Analysis and Public Engagement”, Australian and New Zealand Journal of Art, 17(2), pp. 182–194. Available at: https://doi.org/10.1080/14434318.2017.1450063.

Shemek, D., et al. (2018) “Renaissance Remix. Isabella d’Este: Virtual Studiolo,” Digital Humanities Quarterly, 12(4). Available at: http://www.digitalhumanities.org/dhq/vol/12/4/000400/000400.html.

Roth, A., and Fisher, C. (2019) “Building Augmented Reality Freedom Stories: A Critical Reflection,” in Kee, Kevin, and Compeau, Timothy (eds.) Seeing the Past with Computers: Experiments with Augmented Reality and Computer Vision for History. Ann Arbor: University of Michigan Press, pp. 137–157. Available at: https://www.jstor.org/stable/j.ctvnjbdr0.11

Herron, Thomas. (2020) Recreating Spenser: The Irish Castle of an English Poet. Greenville, N.C.: Author.

François, Paul, et al. (2021) “Virtual reality as a versatile tool for research, dissemination and mediation in the humanities,” Virtual Archaeology Review 12(25), pp. 1–15. Available at: https://doi.org/10.4995/var.2021.14880.

Sean Fraga researched and designed the project’s technical architecture and user interface affordances; Fraga also prepared the article manuscript. Christy Ye developed a method for transforming digital images into virtual objects. Samir Ghosh ideated and refined Booksnake’s UI/UX fundamentals. Henry Huang built a means of organizing and persistently storing item metadata and digital images using Apple’s Core Data framework. Fraga developed and Huang implemented a method for creating size-accurate virtual objects from Library of Congress metadata. Fraga, Ye, and Huang jointly designed support for compound objects, and Ye and Huang collaborated to implement support for compound objects; this work was supported by an NEH Digital Humanities Advancement Grant (HAA-287859-22). Zack Sai is building support for the new IIIF v3.0 APIs. Michael Hughes is expanding the range of interactions possible with virtual objects in AR. Siyu (April) Yao is building technical links to additional digitized collections. Additionally, Peter Mancall serves as senior advisor to the project. Curtis Fletcher provides strategic guidance, and Mats Borges provides guidance on user testing and feedback.

Fisher, Adam. (2018) Valley of Genius: The Uncensored History of Silicon Valley. New York: Twelve.

Nolan, Maura. (2013) “Medieval Habit, Modern Sensation: Reading Manuscripts in the Digital Age,” The Chaucer Review, 47(4), pp. 465–476. Available at: https://doi.org/10.5325/chaucerrev.47.4.0465.

Szpiech, Ryan. (2014) “Cracking the Code: Reflections on Manuscripts in the Age of Digital Books.” Digital Philology: A Journal of Medieval Cultures 3(1), pp. 75–100. Available at: https://doi.org/10.1353/dph.2014.0010.

Kropf, Evyn. (2017) “Will that Surrogate Do?: Reflections on Material Manuscript Literacy in the Digital Environment from Islamic Manuscripts at the University of Michigan Library,” Manuscript Studies, 1(1), pp. 52–70. Available at: https://doi.org/10.1353/mns.2016.0007.

Almas, Bridget, et al. (2018) “Manuscript Study in Digital Spaces: The State of the Field and New Ways Forward”, Digital Humanities Quarterly, 12(2). Available at: http://digitalhumanities.org:8081/dhq/vol/12/2/000374/000374.html.

Porter, Dot. (2018) “Zombie Manuscripts: Digital Facsimiles in the Uncanny Valley”. Presentation at the International Congress on Medieval Studies, Western Michigan University, Kalamazoo, Michigan, 12 May 2018. Available at: https://www.dotporterdigital.org/zombie-manuscripts-digital-facsimiles-in-the-uncanny-valley/

van Zundert, Joris. (2018) “On Not Writing a Review about Mirador: Mirador, IIIF, and the Epistemological Gains of Distributed Digital Scholarly Resources.” Digital Medievalist 11(1). Available at: http://doi.org/10.16995/dm.78.

van Lit, L. W. C. (2020) Among Digitized Manuscripts: Philology, Codicology, Paleography in a Digital World. Leiden: Brill.

We focus here on the decisions involved in digitizing an item itself, rather than the decisions about what items get digitized and why, which also, of course, inform the development and structure of a given digitized collection.

Drucker, Johanna. (2013) “Is There a ‘Digital’ Art History?”, Visual Resources, 29(1-2). Available at: https://doi.org/10.1080/01973762.2013.761106.

Federal Agency Digitization Guidelines Initiative. (2023) Technical Guidelines for Digitizing Cultural Heritage Materials: Third Edition. [Washington, DC: Federal Agency Digitization Guidelines Initiative.] Available at: https://www.digitizationguidelines.gov/guidelines/digitize-technical.html.

Haraway, Donna. (1988) “Situated Knowledges: The Science Question in Feminism and the Privilege of Partial Perspective,” Feminist Studies 14(3), pp. 575–599. Available at: https://www.jstor.org/stable/3178066.

Project Mirador. (N.d.) Mirador. Available at: https://projectmirador.org/.

OpenSeadragon. (N.d.) OpenSeadragon. Available at: http://openseadragon.github.io/.

Sundar, S., Bellur, Saraswathi, Oh, Jeeyun, Xu, Qian, & Jia, Haiyan. (2013) “User Experience of On-Screen Interaction Techniques: An Experimental Investigation of Clicking, Sliding, Zooming, Hovering, Dragging, and Flipping.” Human–Computer Interaction 29(2), pp. 109–152. Available at: https://doi.org/10.1080/07370024.2013.789347.

Crane, Tom. (2021) “On being the right size” [Online]. Canvas Panel. Available at: https://canvas-panel.digirati.com/developer-stories/rightsize.html.

D’Ignazio, Catherine, and Klein, Lauren F. (2020) Data Feminism. Cambridge, Mass.: The MIT Press. Available at: https://data-feminism.mitpress.mit.edu/.

Drucker, Johanna. (2011) “Humanities Approaches to Graphical Display”, Digital Humanities Quarterly, 5(1). Available at: https://www.digitalhumanities.org/dhq/vol/5/1/000091/000091.html.

Kai-Kee, E., Latina, L., and Sadoyan, L. (2020) Activity-based Teaching in the Art Museum: Movement, Embodiment, Emotion. Los Angeles: Getty Museum.

Kenderdine, Sarah, and Yip, Andrew. (2019) “The Proliferation of Aura: Facsimiles, Authenticity and Digital Objects” in Drotner, K., et al. (eds.) The Routledge Handbook of Museums, Media and Communication. London and New York: Routledge, pp. 274–289. Available at: https://www.taylorfrancis.com/chapters/oa-edit/10.4324/9781315560168-23/proliferation-aura-sarah-kenderdine-andrew-yip. ↩︎

Bacca, Jorge, et al. (2014) “Augmented Reality Trends in Education: A Systematic Review of Research and Applications”, Educational Technology & Society, 17(4), pp. 133–149. Available at: https://www.jstor.org/stable/jeductechsoci.17.4.133. ↩︎

Bailenson, Jeremy. (2018) Experience on Demand: What Virtual Reality Is, How It Works, and What It Can Do. New York: W. W. Norton & Co. ↩︎

Luo, Michelle. (2019) “Explore art and culture through a new lens” [Blog]. The Keyword. Available at: https://blog.google/outreach-initiatives/arts-culture/explore-art-and-culture-through-new-lens/. ↩︎

Google. (N.d.) Add items [Online]. Google Arts & Culture Platform Help. Available at: https://support.google.com/culturalinstitute/partners/answer/4365018?hl=en&ref_topic=6056759&sjid=9709484027047671346-NA ↩︎

Haynes, Ronald. (2018) “Eye of the Veholder: AR Extending and Blending of Museum Objects and Virtual Collections” in Jung, T., and tom Dieck, M. C. (eds.) Augmented Reality and Virtual Reality: Empowering Human, Place and Business. Cham, Switzerland: Springer, pp. 79–91. Available at: https://doi.org/10.1007/978-3-319-64027-3_6. ↩︎

Haynes, Ronald. (2019) “To Have and Vehold: Marrying Museum Objects and Virtual Collections via AR” in tom Dieck, M. C., and Jung, T. (eds.) Augmented Reality and Virtual Reality: The Power of AR and VR for Business. Cham, Switzerland: Springer, pp. 191–202. Available at: https://doi.org/10.1007/978-3-030-06246-0_14. ↩︎

Cool, Matt. (2022) “5 Inspiring Galleries Built with Hubs” [Blog]. Mozilla Hubs Creator Labs. Available at: https://hubs.mozilla.com/labs/5-incredible-art-galleries/. ↩︎

Bifrost Consulting Group. (2023) “Let’s build cultural spaces in the metaverse”. Available at: https://diomira.ca/. ↩︎

Greengard, Samuel. (2019) Virtual Reality. Cambridge, Mass.: The MIT Press. ↩︎

Pew Research Center. (2021) Mobile Fact Sheet. Available at: http://pewrsr.ch/2ik6Ux9 ↩︎

Atske, Sara, and Perrin, Andrew. (2021) “Home broadband adoption, computer ownership vary by race, ethnicity in the U.S.” Pew Research Center. Available at: https://pewrsr.ch/3Bdn6tW. ↩︎

Vogels, Emily A. (2021) “Digital divide persists even as Americans with lower incomes make gains in tech adoption” Pew Research Center. Available at: https://pewrsr.ch/2TRM7cP. ↩︎

The first version of Booksnake supports the IIIF v2.1 APIs. We are currently building support for the new IIIF v3.0 APIs. ↩︎
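The two Presentation API versions organize a manifest differently. The following is a minimal sketch in Python, not Booksnake's own code. It shows how a client might branch on the Presentation API context URI a manifest declares, then locate the canvases that carry each page's width and height. In v2.1 these sit inside the first `sequences` entry; in v3.0 they appear directly under `items`. The function names are hypothetical, and the sample manifests are stripped-down illustrations rather than complete, valid manifests.

```python
from typing import Any

# Published JSON-LD context URIs for the two IIIF Presentation API versions.
PRESENTATION_2_CONTEXT = "http://iiif.io/api/presentation/2/context.json"
PRESENTATION_3_CONTEXT = "http://iiif.io/api/presentation/3/context.json"


def presentation_version(manifest: dict[str, Any]) -> int:
    """Return 2 or 3 depending on which Presentation API context the manifest declares."""
    context = manifest.get("@context")
    # @context may be a single string or a list (e.g., alongside extension contexts).
    contexts = context if isinstance(context, list) else [context]
    if PRESENTATION_3_CONTEXT in contexts:
        return 3
    if PRESENTATION_2_CONTEXT in contexts:
        return 2
    raise ValueError("manifest does not declare a IIIF Presentation 2 or 3 context")


def canvases(manifest: dict[str, Any]) -> list[dict[str, Any]]:
    """Return the manifest's canvases (hypothetical helper for illustration)."""
    if presentation_version(manifest) == 2:
        # v2.1: canvases live inside the first (default) sequence.
        sequences = manifest.get("sequences", [])
        return sequences[0].get("canvases", []) if sequences else []
    # v3.0: sequences are gone; canvases are listed directly under "items".
    return [item for item in manifest.get("items", []) if item.get("type") == "Canvas"]


# Stripped-down sample manifests, for illustration only.
v2 = {
    "@context": PRESENTATION_2_CONTEXT,
    "sequences": [{"canvases": [{"@type": "sc:Canvas", "width": 4000, "height": 6000}]}],
}
v3 = {
    "@context": PRESENTATION_3_CONTEXT,
    "items": [{"type": "Canvas", "width": 4000, "height": 6000}],
}

if __name__ == "__main__":
    for m in (v2, v3):
        print(presentation_version(m), [(c["width"], c["height"]) for c in canvases(m)])
```

Branching once on the declared context keeps the rest of a client version-agnostic, because downstream code only ever sees a list of canvases.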

International Image Interoperability Framework. (N.d.) Consortium Members [Online]. Available at: https://iiif.io/community/consortium/members/. ↩︎

OCLC. (N.d.) CONTENTdm and the International Image Interoperability Framework (IIIF) [Online]. Available at: https://www.oclc.org/en/contentdm/iiif.html. ↩︎

Luna Imaging. (2018) LUNA and IIIF [Online]. Available at: http://www.lunaimaging.com/iiif. ↩︎

orangelogic. (N.d.) Connect to your favorite systems: Get DAM integrations that fit your existing workflows [Online]. Available at: https://www.orangelogic.com/features/integrations. ↩︎

Apple. (N.d.) ARKit [Online]. Apple Developer. Available at: https://developer.apple.com/documentation/arkit/. ↩︎

Apple. (N.d.) RealityKit [Online]. Apple Developer. Available at: https://developer.apple.com/documentation/realitykit/. ↩︎

To avoid pixelated virtual objects, the host institution must serve through IIIF a sufficiently high-resolution digital image. ↩︎

International Image Interoperability Framework. (2015) “Physical Dimensions” in Albritton, Benjamin, et al. (eds.) Linking to External Services [Online]. Available at: https://iiif.io/api/annex/services/#physical-dimensions. ↩︎

David Newbury’s “IIIF for Dolls” Web tool makes inventive use of the IIIF Physical Dimensions service to rescale digitized materials 70. ↩︎
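The Physical Dimensions service pairs `physicalScale`, the physical length of one canvas pixel, with `physicalUnits`, which is one of `mm`, `cm`, or `in`. Multiplying a canvas's width and height by that scale gives the item's physical size. The Python sketch below applies this rule and converts the result to meters, the unit used by AR frameworks such as RealityKit. The function names, the meter conversion, and the ppi helper are illustrative assumptions, not code from Booksnake or from "IIIF for Dolls". The last helper ties the service to the resolution concern raised above. At a scale of 0.0025 inches per pixel, a life-size rendering carries 400 canvas pixels per inch.

```python
from dataclasses import dataclass

# Meters per unit for the values the Physical Dimensions service allows in physicalUnits.
METERS_PER_UNIT = {"mm": 0.001, "cm": 0.01, "in": 0.0254}
METERS_PER_INCH = 0.0254


@dataclass
class PhysicalSize:
    width_m: float
    height_m: float


def _meters_per_canvas_pixel(service: dict) -> float:
    units = service["physicalUnits"]
    if units not in METERS_PER_UNIT:
        raise ValueError(f"unsupported physicalUnits: {units!r}")
    # physicalScale is the physical length (in physicalUnits) of one canvas pixel.
    return float(service["physicalScale"]) * METERS_PER_UNIT[units]


def physical_size(canvas_width: int, canvas_height: int, service: dict) -> PhysicalSize:
    """Multiply canvas width and height by the scale factor, expressed in meters."""
    factor = _meters_per_canvas_pixel(service)
    return PhysicalSize(canvas_width * factor, canvas_height * factor)


def life_size_ppi(service: dict) -> float:
    """Canvas pixels per inch when the item is displayed at life size (hypothetical helper)."""
    return METERS_PER_INCH / _meters_per_canvas_pixel(service)


# Example service block, shaped as in the IIIF annex.
service = {
    "@context": "http://iiif.io/api/annex/services/physdim/1/context.json",
    "profile": "http://iiif.io/api/annex/services/physdim",
    "physicalScale": 0.0025,
    "physicalUnits": "in",
}

if __name__ == "__main__":
    size = physical_size(4000, 6000, service)
    print(f"{size.width_m:.3f} m x {size.height_m:.3f} m at {life_size_ppi(service):.0f} ppi")
```

The ppi figure counts canvas pixels. It matches the delivered image's resolution only when the institution serves the image at the canvas's full dimensions.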

The Library of Congress (LOC) uses MARC data to construct its IIIF manifests, but this conversion is sometimes lossy 71. ↩︎

Both ppi requirements are for FADGI’s highest performance level, 4 Star. ↩︎

More information about Booksnake’s metadata requirements is available at https://booksnake.app/glam/. ↩︎

Another example: For some digitized books held by LOC, the first image is of the book spine, not the book cover. Booksnake incorporates logic to identify and ignore book spine images. ↩︎

Drucker, Johanna. (2014) Graphesis: Visual Forms of Knowledge Production. Cambridge, Mass.: Harvard University Press. ↩︎

Gibson, William. (1984) Neuromancer. Reprint, New York: Ace, 2018. ↩︎

Stephenson, Neal. (1992) Snow Crash. Reprint, New York: Bantam, 2000. ↩︎

Cline, Ernest. (2011) Ready Player One. New York: Broadway Books. ↩︎

Ball, Matthew. (2022) The Metaverse: And How It Will Revolutionize Everything. New York: Liveright. ↩︎

IIIF Consortium staff. (2022) New 3D Technical Specification Group [Online]. Available at: https://iiif.io/news/2022/01/11/new-3d-tsg/. ↩︎

Newbury, David. (2023) IIIF for Dolls [Online]. Available at: https://iiif-for-dolls.davidnewbury.com/. ↩︎

Woodward, Dave. (2021) Email to Sean Fraga, February 2.
