One of the projects I’ve had the privilege of working on over the last year is Readux ([source code on GitHub](https://github.com/emory-libraries/readux)), which aims to make Emory Libraries’ digitized books discoverable by collection and available for research, scholarship, and teaching. This work has been all the more gratifying because I worked on an earlier version of the project that sadly never went into production (see more on the project history).

One of the interesting technical challenges has been making both page images and text available and useful. There are plenty of “page-turner” or “book reader” applications (e.g., Internet Archive BookReader, or the IIIF viewer Mirador), but inevitably it seems they are written in a different programming language, or are difficult to adapt, modify, and integrate into a larger application. The technologies underlying these applications are probably all pretty similar: page images (scans or photographs of physical book pages) with text somewhere that has been generated through OCR (optical character recognition). That text is usually used for search but not display, since OCR tends to be unreliable.

In Readux, we want the page content to be annotatable—both the images and the text content—so we went a different route. The OCR text is put on the page, invisibly layered over the image. It’s positioned and sized as close as possible to the original text on the image, based on the information encoded in the OCR. The text is kept invisible, because even for the volumes where OCR works well, there are still enough errors that it’s easier to read the image rather than the OCR (and in some cases, the OCR is really quite terrible and unreadable). Putting the text on the page makes it easy for users to take advantage of web browsers’ normal text functionality, such as the ability to search on the page’s text or highlight it, either to copy it for pasting elsewhere or for annotation.

I recently learned about Project Naptha, a new browser-based tool to identify and expose text within images. The result, when it works well, looks remarkably similar to what we’re doing in Readux—except that, instead of trying to find the text in the image after the fact and on the fly, we’re providing access to the text data that has already been generated.

How it works

To start with, we wanted the text and position data in a common format. So we wrote [XSLT](https://github.com/emory-libraries/readux/blob/1.8.3/readux/books/ocr_to_teifacsimile.xsl) to convert our OCR XML to [TEI facsimile](http://www.tei-c.org/release/doc/tei-p5-doc/en/html/PH.html#PHFAX). Because it’s intended to document digital images of source materials, TEI facsimile includes tags and attributes that can fully describe the position and size of blocks and text on the page. This maps quite well to the positional data in our OCR. Our digitized volumes already include two major kinds of OCR XML output: two versions of Abbyy OCR, and the newer METS/ALTO. As technologies and standards change, we may eventually have other formats; but as long as we can translate the information to TEI facsimile, we have a common format that we can use.
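The Readux conversion itself is an XSLT stylesheet. As a rough illustration of the kind of mapping involved, here is a minimal Python sketch that reads word-level ALTO and emits TEI facsimile zones. It assumes the ALTO coordinates are already in page-image pixels (ALTO can declare other measurement units), handles only `Page` and `String` elements, and stores each word in a `<w>` child of its zone, which is a hypothetical choice; the real stylesheet's output may be structured differently. `alto_to_tei_facsimile` and `SAMPLE_ALTO` are illustrative names, not part of Readux.

```python
import xml.etree.ElementTree as ET

TEI_NS = "http://www.tei-c.org/ns/1.0"
ET.register_namespace("", TEI_NS)  # serialize TEI as the default namespace

# Hypothetical sample: one ALTO page with two word-level String elements.
SAMPLE_ALTO = """<alto xmlns="http://www.loc.gov/standards/alto/ns-v2#">
  <Layout><Page WIDTH="2000" HEIGHT="3000">
    <PrintSpace><TextBlock><TextLine>
      <String CONTENT="Sacred" HPOS="100" VPOS="200" WIDTH="300" HEIGHT="60"/>
      <String CONTENT="Harp" HPOS="420" VPOS="200" WIDTH="200" HEIGHT="60"/>
    </TextLine></TextBlock></PrintSpace>
  </Page></Layout>
</alto>"""


def local_name(tag: str) -> str:
    """Drop a '{namespace}' prefix so the code works with any ALTO version."""
    return tag.rsplit("}", 1)[-1]


def tei(tag: str) -> str:
    """Qualify a tag name with the TEI namespace."""
    return f"{{{TEI_NS}}}{tag}"


def alto_to_tei_facsimile(alto_xml: str) -> ET.Element:
    """Map ALTO <Page>/<String> geometry onto TEI <facsimile>/<surface>/<zone>.

    Assumes ALTO coordinates are page-image pixels.
    """
    root = ET.fromstring(alto_xml)
    page = next(el for el in root.iter() if local_name(el.tag) == "Page")

    facsimile = ET.Element(tei("facsimile"))
    # The surface covers the whole page image, so its lower-right corner
    # is the full image width and height.
    surface = ET.SubElement(
        facsimile, tei("surface"),
        ulx="0", uly="0", lrx=page.get("WIDTH"), lry=page.get("HEIGHT"),
    )
    for el in page.iter():
        if local_name(el.tag) != "String":
            continue
        x, y, w, h = (float(el.get(a)) for a in ("HPOS", "VPOS", "WIDTH", "HEIGHT"))
        # TEI zones use upper-left (ulx, uly) and lower-right (lrx, lry) corners,
        # while ALTO gives a top-left position plus width and height.
        zone = ET.SubElement(
            surface, tei("zone"), type="string",
            ulx=str(round(x)), uly=str(round(y)),
            lrx=str(round(x + w)), lry=str(round(y + h)),
        )
        # Hypothetical choice: keep the recognized word in a <w> child.
        ET.SubElement(zone, tei("w")).text = el.get("CONTENT")
    return facsimile


if __name__ == "__main__":
    print(ET.tostring(alto_to_tei_facsimile(SAMPLE_ALTO), encoding="unicode"))
```

Running the script prints a `<facsimile>` containing one `<surface>` and two `<zone>` elements. Supporting a second OCR format such as Abbyy would mean another function producing the same TEI shape, which is the point of having a common format.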

Blocks of text are absolutely positioned within a container div that is sized based on the page image. The top and left coordinates of the text div, as well as the height and width, are all calculated as percentages of the full page image size (which is also stored in the OCR and TEI facsimile XML). Because we don’t have consistent font family or size data in the OCR, the display font sizes are generated in a similar fashion. A rough, pixel-based font size is calculated based on the scale of the original page image to the display size, but for browsers that support viewport-based font sizes, a font size is calculated as a percentage of the image, which is then scaled to the relative viewport size using JavaScript. In addition, custom JavaScript adjusts the text spacing with the CSS word-spacing and letter-spacing properties so that the text fills the width of its block: for longer lines of text, this results in a fairly close approximation of where the words are on the page image. Because each section of text is positioned using percentages, the text positions adjust along with the image when it is resized responsively, and the relative font height and text width functions are set to run again after the window is resized.
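In Readux these calculations happen in a custom Django template tag; the Python below is only a minimal sketch of the same arithmetic, not that tag's code. `Zone`, `zone_style`, `pixel_font_size`, and `relative_font_height` are hypothetical names, and using a zone's height directly as its font size is an assumed simplification.

```python
from dataclasses import dataclass


@dataclass
class Zone:
    """A text block's corners in page-image pixels (as in TEI ulx/uly/lrx/lry)."""
    ulx: int
    uly: int
    lrx: int
    lry: int

    @property
    def width(self) -> int:
        return self.lrx - self.ulx

    @property
    def height(self) -> int:
        return self.lry - self.uly


def percent(value: float, total: float) -> str:
    """Format value as a CSS percentage of total."""
    return f"{100 * value / total:.2f}%"


def zone_style(zone: Zone, page_width: int, page_height: int) -> dict[str, str]:
    """CSS for an absolutely positioned text div inside a page-sized container.

    Horizontal values are relative to the page width and vertical values to
    the page height, matching how CSS resolves left/width and top/height
    percentages against the containing block.
    """
    return {
        "position": "absolute",
        "left": percent(zone.ulx, page_width),
        "top": percent(zone.uly, page_height),
        "width": percent(zone.width, page_width),
        "height": percent(zone.height, page_height),
    }


def pixel_font_size(zone: Zone, page_width: int, display_width: int) -> int:
    """Rough fallback: the zone height scaled from original to displayed size."""
    return max(1, round(zone.height * display_width / page_width))


def relative_font_height(zone: Zone, page_height: int) -> float:
    """Zone height as a percentage of the page image height.

    Client-side code would (hypothetically) multiply this by the rendered
    image height to get a font size that tracks the current display size.
    """
    return 100 * zone.height / page_height
```

For a 2000×3000 pixel page, `zone_style(Zone(100, 200, 400, 260), 2000, 3000)` gives `left: 5.00%`, `top: 6.67%`, `width: 15.00%`, and `height: 2.00%`, and at an 800 pixel display width the fallback font size is 24 pixels. Because nothing is stored in absolute pixels except the fallback, only the font size needs recomputing when the window is resized.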

Sometimes it’s easier to understand things by looking at examples, so here are some links for sample content in Readux and some of the code I mentioned. If you’re interested in the HTML layout and positioning, use your browser developer tools on some Readux volume pages to inspect some of the text content.

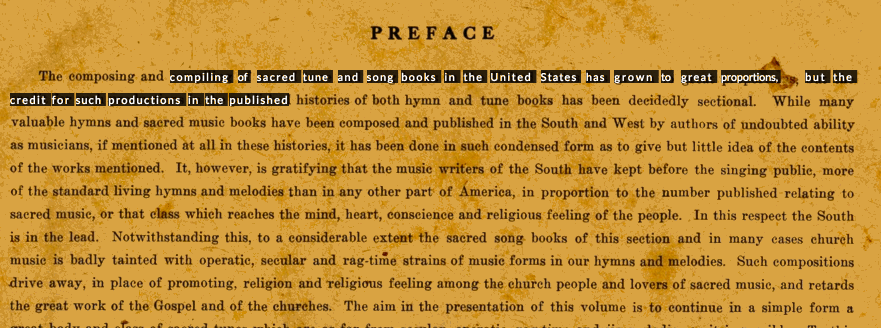

- A [page of music](http://readux.library.emory.edu/books/emory:r8qzb/pages/emory:r9dn7/) from the 1911 Original Sacred Harp. This volume has one METS/ALTO XML file for each page, with word-level position information.
  - METS/ALTO for that page
  - TEI facsimile generated from the METS/ALTO

- A page of text from Ladies First!. This volume has one Abbyy OCR XML file for the entire volume, with line-level position information.
  - Abbyy OCR for the entire volume
  - TEI facsimile generated from the appropriate page of the Abbyy OCR

- Custom Django template tag code that does the majority of the position and size calculations

- Custom JavaScript with textwidth and relativeFontHeight functions
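The textwidth function itself is JavaScript that measures rendered text in the browser. As a hedged illustration of the arithmetic such a function might do once it has a measurement, here is a Python sketch that splits the gap between the text's natural width and its block width across CSS letter-spacing and word-spacing. The `fit_spacing` name, the `word_share` parameter, and counting letter gaps as `len(text) - 1` are all assumptions, not the Readux implementation.

```python
def fit_spacing(text: str, natural_width: float, target_width: float,
                word_share: float = 0.5) -> tuple[float, float]:
    """Return (letter_spacing, word_spacing) in px so text spans target_width.

    natural_width is the rendered width of the text with no extra spacing.
    The difference is split between word gaps (each space character) and
    letter gaps (approximated as len(text) - 1); a negative difference
    yields negative spacing, which tightens the text.
    """
    extra = target_width - natural_width
    letter_gaps = max(len(text) - 1, 1)
    spaces = text.count(" ")
    if spaces == 0:
        # A single word: only letter-spacing can absorb the difference.
        return extra / letter_gaps, 0.0
    word_extra = extra * word_share
    return (extra - word_extra) / letter_gaps, word_extra / spaces


if __name__ == "__main__":
    ls, ws = fit_spacing("Ladies First", natural_width=100, target_width=155)
    print(f"letter-spacing: {ls}px; word-spacing: {ws}px")
```

With these inputs the 55 extra pixels are split evenly, giving 2.5px of letter-spacing across 11 gaps and 27.5px of word-spacing at the single space. Lines with more words spread the difference more thinly, which is consistent with longer lines producing a closer approximation of the word positions on the page image.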

Screen captures created with GifGrabber; preview still images extracted using EZGif.