This was an odd mix of presentations (at least, I couldn’t find any common theme), but fortunately for me I was interested in all three of them: an experimental 3D poetry visualization grounded in literary theory; Amy Earhart’s compelling argument for the need to recover early digitization projects that are disappearing or even already gone; and Doug Reside’s discussion of the successes and problems with DH Code Camps.

Violence and the Digital Humanities Text as Pharmakon#

Adam James Bradley (view abstract, video of the presentation)

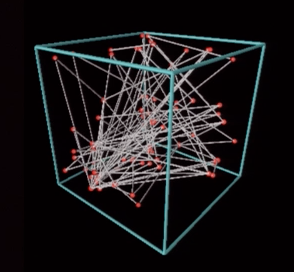

Bradley introduced himself as a student both of literary poetry and of system engineering and design (although he came off a bit pretentious when he called himself “precocious” and described himself as a kind of Janus standing in whatever door or hallway connects the English department with the Systems Engineers - strange given the context of DH, where nearly all of us have interests and expertise in multiple fields like this). Bradley says he wanted to explore the question, “what happens when literary theory is put in contact with technology?” He says that he thinks of poetics as synchronic and likes to approach poetry as “words in space” (noting that after other versions of this presentation attendees have “diagnosed” him with synaesthesia). Bradley then proceeded to show a screenshot of 3D graph of Blake’s “The Tyger”, generated by a system he has been collaborating on to provide a 3D model of poetry, and he discussed this approach as a defamiliarization of the text: it provides structure without words, allowing the user to rotate, zoom, and interact with the 3D graph of the poem. He frames his 3D model using the work of Diderot, describing the interaction between art, the world, and the critic: all matter acts and reacts (“re-acts”), and thus literary criticism is a part of an (or the) act of creation. There are relationships between structure, form and function; human creativity is always a kind of “re-creativity” because it deals with cerated things.

Bradley thinks of his technologically-based visualizations as experimentation based on theory, and thinks that these types of data visualizations can help move us through the three phases of Diderot’s theory. He suggests that visualizations should be interactive, and claims that his 3D model works as a heuristic or a proof-of-concept. He claims that other kinds of visualizations are too complex, or require additional metadata (beyond the text). In particular, he claimed that word clouds such as those produced by Wordle or Voyant aren’t actually useful if you aren’t already familiar with the text (although I wonder if perhaps that only applies to poorly-generated word clouds).

After attempting to ground his work in literary theory, Bradley went into more detail on his model. Each word in the text is mapped into a 3D box, which he describes as an “infinite space for every word in the English language,” and then lines are drawn between the words (presumably based on the order of words in the text); in this case, words are mapped to a base-26 numbering system by letter (or alternatively a base-48 system with letters and punctuation, not differentiating upper and lower case), and coordinates are determined by successive letters within the space. The first three letters of a word correspond to x, y, and z coordinates and any successive letters refine those coordinates; I don’t think he addressed how (or if) they handle words with less than three letters.

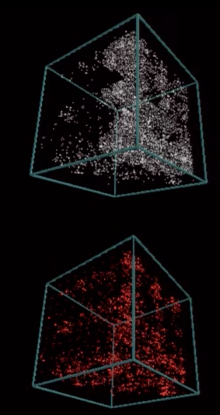

I think Bradley is probably right that we should do a better of job of theorizing and analyzing such things as DH text visualizations (sometimes, perhaps we get distracted by a shiny new tool or pretty output and perhaps don’t consider as deeply the embedded assumptions or value of the result), although I found his “high-theory” approach and even the title of his talk rather off-putting. And in particular, I’m not at all convinced of the model that Bradley and his colleagues have actually come up with– in particular, the mapping into 3D space seems completely arbitrary. The one observation on Blake’s “Tyger” that Bradley provided based on his graph is the centrality of the repeated words “and” and “what”– which doesn’t seem terribly significant or insightful, and also seems like something that would be pretty easy to discover if you just look carefully at the text itself (i.e., by doing a standard close reading). When Bradley showed images of a couple of other graphs, suggesting the limits of his model - a graph of all of Shakespeare’s texts and one of the OED - it became very clear that what he is actually graphing here is the distribution of letters as they are used in the English language. This might be interesting for a few texts (perhaps a poem with a preponderance of ps), but I suspect there are more useful and efficient ways to investigate that, if we even want to. Bradley and some of the commenters after the presentation suggested that there are plenty of other possibilities for mapping words, such as by semantics or by sound; but I think that unless we can rank and graph words on three completely different axes (e.g., attributes like how common a word is in normal usage; or the age of a word, if we can leverage Marc Alexander’s work) rather than arbitrarily segmenting words to create axes, this approach is unlikely to be meaningful.

In his abstract, Bradley tries to differentiate his work from TextArc, but this actually reminds me of TextArc in some ways - it might be pretty (maybe), or interesting, but is it meaningful or useful? I actually made use of TextArc in my dissertation, theorizing it as a kind of “deformative interpretation” (borrowing from Jerome McGann), and found a few interesting insights; I’m not sure I would be able to do the same with Bradley’s 3D models.

Recovering the Recovered Text: Diversity, Canon Building, and Digital Studies#

Amy Earheart (view abstract, video of the presentation)

Amy Earheart had a very engaging, convincing presentation about the need to addressing the diversity of digital canons within the American educational system. She presented a kind of whirl-wind history of text digitization projects as “canon wars”, citing Julia Flanders that the Brown University Women Writers Project was developed partly in response to the Norton Anthology of Literature by Women (e.g., there was not enough coverage of marginal texts, such as pre-1900, African-American, etc). Earheart referenced the early optimism of digital scholarship as both idealistic and activistic, and as the “great hope” that would serve to correct the canon. In particular, the idea that anyone can contribute to the online digital canon is a compelling one, and in addition to scholarly projects there are and were many non-scholarly collections (by libraries and individuals, scholars and fans). A lot of those early projects were very “DIY”: non-scholarly, or not associated with DH centers or libraries, and often HTML rather than database-driven or SGML.

Earheart’s concern is that these old resources are gone in many cases, and not even accessible on the Wayback Machine. We are losing these first-generation recovery projects, and in effect we are returning to the old canon of major authors. And this is especially a concern for texts that are not available in any other way, even in print. Earheart makes the case that we need a targeted recovery, and says that the problem is not technical but social (referencing Matt Kirschenbaum). Earheart discussed the particular example of the Winnifred Eaton Digital Archive, which was created by a scholar when she discovered rare texts in an archive, which she then got permission to digitize and make available online. That content is now being migrated into a larger text archive, but in the process they are dropping some of the content from the original site, including the scholarly introduction that gives the work context.

Earheart also makes some practical suggestions. She says we need to start working on a stop-gap preservation: acquire artifacts, practice small-scale intervention. One commenter suggested that perhaps we can harness existing projects, e.g. merge smaller, relevant sites into larger existing ones. She noted that it is important to begin immediately, as a community, and that we need to do some triage, particularly for content that is not readily available. Earheart also voiced a concern about Digital Humanities scholarship shifting to large scale data work - if the dataset is not good, or incomplete, then our results will be skewed. Another commenter asked if John Unsworth’s notion that “some projects need to die” (so we can learn from them) is relevant here, and Earheart says that this may be the case, but that is is emphatically not okay if that means we lose the content. I remember there was some pretty active conversation about this on Twitter, too - I think it was there that someone noted that Libraries tend to preserve sites and content like this as “dead” but that scholars need it “live”; I’m not sure I agree with that assessment, but it is definitely worth thinking more about the different purposes and assumptions that librarians and scholars have and bring to their digital projects.

This is a really valuable conversation to have, both for the Digital Humanities world and also for Digital Libraries. I think that our text and digitization projects here at Emory Libraries or in the Beck Center are languishing in some ways, even if it is on the smaler scale of not being maintained or updated as we might like due to funding, staffing, and organizational priorities.

Smaller is Smarter: Or, when the Hare does beat the Tortoise#

(previously titled “Code Sprints and Infrastructure”)

Doug Reside (view abstract)

Doug Reside presented on his experience with DH code camps, where they have attempted to do rapid prototyping with a group of scholar-programmers. Examples of this are One Week, One Tool, Interedition,and the MITH XML barn-raising. The idea is tobuild tools that might point towards infrastructure.

Some of the problems they’ve run into include disagreement aboutcode-sharing mechanism, coding languages/dialects (e.g., jQuery vs YUI); lack of focus on documentation; and differing assumptions about goals. Reside pointed out the differencebetween big infrastructure projects and code camps in terms of waterfall vs. agile; I liked his brief characterization of waterall as “plan, then do” vs. agile as “do, learn, do.” Another problem is thatdigital humanists don’t tend to use their own projects (which seems like a pretty big issue when you think about it); the Interedition projects in particular have not been documented or publicized very well.

Another problem with code camps is that employees don’t have time to prepare ahead of time, or continue after the fact (e.g., prepping based on the agreed upon tools, code); this suggests a need for institutional support to allow employees to spend some portion of their time to do this work. Reside suggested that perhaps if a particularinstitution needs a solution, they could start or fund a code camp (sort of like a kickstarter project). This approach has alower overhead, and should provide quick returns on investment; smaller projects may be easier for this, but certain larger projects may lend themselves to being split out across multiple developers, such as the microservices API.

When asked about including non-DH developers, Reside says that is certainly possible, but that it also may require additional prep time. He also pointed out that there is an inherentvalue in getting people together for collaboration, because it fosters community; and that depending on the particular goal, you might need different participants (based on skills or domain). For instance,MITH is experimenting with attaching code sprints to conferences (e.g., DH2013), which should allow for more potential collaborators and consumers, as well as opportunities for feedback.

Since I’m currently employed as a Software Engineer in a Library, I’m particularly interested in the question about including non-DH developers. I have a scholarly background, but most of my colleagues don’t– so they might not fit in the “scholar-programmer” category that Reside is thinking of, but I think the type of work we do here in the Library makes us at least familiar, if not conversant, with the issues scholars at stake, and I think would make us pretty valuable collaborators for this kind of work.