The last time I reread Jules Verne’s Around the World in 80 Days (sometime this spring, while I was on a long trip myself), I was surprised by how many very specific place names in the western United States Verne used, names I wouldn’t necessarily have expected a 19th-century Frenchman to know. Perhaps what caught my eye was the variant spellings of familiar names: alongside places such as Laramie, Salt Lake City, and Omaha, Verne refers to the “Wahsatch Mountains” and the “Tuilla Valley” (now usually spelled Wasatch and Tooele).

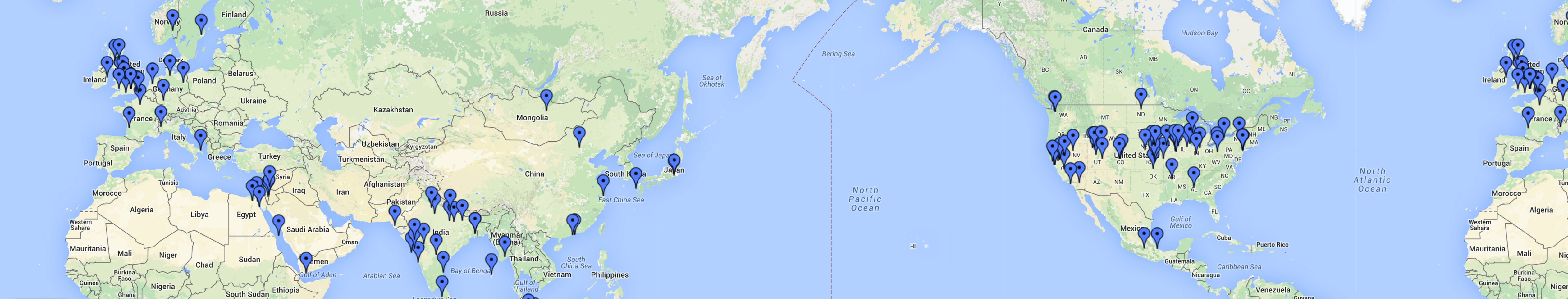

Here is a Google Map I created using some of the tools and technologies we’re using for this project (the book is short enough that it wouldn’t be that difficult to build a detailed map of the trip by hand, but where’s the fun in that?). Each point on the map links to the corresponding DBpedia record and includes snippets of context from the places where it is mentioned in the text. Below, I’ll explain how I created it.


After I finished the book, it occurred to me that currently available technologies should make it fairly easy to extract place names from the text and map them, and since geography is so central to the story, it seemed like an interesting experiment. I went looking for existing maps of Phileas Fogg’s journey and was surprised to find very little.

Wikipedia map of Phileas Fogg’s trip in Around the World in 80 Days

The Wikipedia page for Around the World in 80 Days has a map of the trip, but it’s just a static image, and fairly high-level. There are also Map Tales and TripLine versions, and a couple of Google Maps versions (here and here), but they are still fairly high-level and gloss over many of the details, which I think are what make the trip so interesting.

When I started looking into extracting place names and generating a map, my first thought was to try Edina Unlock, which I had heard about but never had the opportunity to work with. However, I couldn’t get any results from it, and it’s not clear to me whether the service is still maintained or supported. Once we started development for this project, I realized I could use the Python scripts we’ve written as part of the “Name Dropper” codebase. I grabbed the text from Project Gutenberg, cleaned it up a little, split it into chapters, and then used the lookup-names script from namedropper-py to generate a CSV file of the recognized place names for each chapter.

The benefit of using DBpedia and semantic web technologies is that once a name is identified and linked to a DBpedia resource, we have all the other information associated with that resource: in this case, latitude and longitude. Using the CSV data and DBpedia, I wrote some simple Python code to generate a GeoRSS feed that I could import into a Google Map.

This approach has some drawbacks: I’m limited to the names that DBpedia Spotlight can identify (and I’m still trying to figure out a good way to separate good answers from bogus ones), and I’m relying on the geo-coordinates listed in DBpedia (you may notice on the map above that Oregon is pretty clearly in the wrong place).
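Getting coordinates for a DBpedia resource can be sketched as a SPARQL query against DBpedia’s public endpoint. This is a minimal sketch, not the code from my gist: it assumes the endpoint at https://dbpedia.org/sparql is reachable and that the resource carries the W3C WGS84 `geo:lat` and `geo:long` properties, and the function names are hypothetical. It trusts whatever coordinates DBpedia lists, so it inherits the same caveat as above.

```python
import json
import urllib.parse
import urllib.request

# Public DBpedia SPARQL endpoint (an assumption; any SPARQL 1.1 endpoint would do)
SPARQL_ENDPOINT = "https://dbpedia.org/sparql"


def build_coordinate_query(resource_uri: str) -> str:
    """Build a SPARQL query for the WGS84 latitude/longitude of one DBpedia resource."""
    return (
        "PREFIX geo: <http://www.w3.org/2003/01/geo/wgs84_pos#>\n"
        "SELECT ?lat ?long WHERE {\n"
        f"  <{resource_uri}> geo:lat ?lat ; geo:long ?long .\n"
        "} LIMIT 1"
    )


def parse_coordinates(results: dict):
    """Pull (lat, long) floats out of SPARQL JSON results, or None if there are none."""
    bindings = results.get("results", {}).get("bindings", [])
    if not bindings:
        return None
    row = bindings[0]
    return float(row["lat"]["value"]), float(row["long"]["value"])


def fetch_coordinates(resource_uri: str):
    """Send the query over HTTP, asking for the SPARQL JSON results format."""
    params = urllib.parse.urlencode({"query": build_coordinate_query(resource_uri)})
    request = urllib.request.Request(
        f"{SPARQL_ENDPOINT}?{params}",
        headers={"Accept": "application/sparql-results+json"},
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return parse_coordinates(json.load(response))
```

For example, `fetch_coordinates("http://dbpedia.org/resource/Salt_Lake_City")` should return a `(lat, long)` tuple, or `None` if DBpedia has no coordinates for that resource. Keeping the parsing separate from the HTTP call makes it easy to cache lookups, since the same places come up again and again across chapters.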

For those who are interested, here are the nitty-gritty, step-by-step details of how I went from text to map.

- Downloaded the plain-text version of the novel from Project Gutenberg.

- Manually removed the Project Gutenberg header and footer from the text, as well as the table of contents.

Note that Around the World in 80 Days is in the public domain in the U.S., and according to the Project Gutenberg License, once you have removed the Project Gutenberg license and any references to Project Gutenberg, what you have left is a public domain ebook, and “you can do anything you want with that.”
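I did this by hand, but the header and footer can usually be located automatically, because Project Gutenberg texts mark the start and end of the book with lines beginning “*** START OF” and “*** END OF”. A minimal sketch, assuming those marker lines are present (older files may word them differently); the function name is hypothetical, and it does not remove the table of contents, which still has to be cut by hand.

```python
def strip_gutenberg_boilerplate(text: str) -> str:
    """Return only the lines between the Gutenberg START and END marker lines."""
    lines = text.splitlines()
    # Index of the START marker, or -1 so the slice begins at line 0 if it is missing
    start = next((i for i, line in enumerate(lines) if line.startswith("*** START OF")), -1)
    # Index of the END marker, or the end of the text if it is missing
    end = next((i for i, line in enumerate(lines) if line.startswith("*** END OF")), len(lines))
    # Drop blank lines left over at either edge of the body
    return "\n".join(lines[start + 1:end]).strip("\n")
```

If neither marker is found, the text comes back unchanged apart from trimmed blank lines, so it is worth a quick look at the result before moving on.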

- Split the text into individual files by chapter using csplit (a command-line utility that splits a file into sections at lines matching a pattern):

```sh
csplit -f chapter 80days.txt "/^Chapter/" '{35}'
```
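With csplit, the repeat count in braces is the number of additional matches after the first, so it has to be one less than the number of chapter headings. If you’d rather not work that number out (or don’t have csplit), the same split can be sketched in Python. This is a minimal sketch, not part of my original workflow; like the csplit pattern, it assumes every chapter heading line starts with “Chapter”, the function names are hypothetical, and it needs Python 3.9 or later.

```python
import re
from pathlib import Path

# Zero-width split point at the start of any line beginning with "Chapter",
# mirroring the csplit pattern /^Chapter/ (splitting on empty matches needs Python 3.7+)
CHAPTER_START = re.compile(r"^(?=Chapter)", re.MULTILINE)


def split_chapters(text: str) -> list[str]:
    """Split the text before each chapter heading, dropping blank pieces."""
    return [piece for piece in CHAPTER_START.split(text) if piece.strip()]


def write_chapters(pieces: list[str], out_dir: str = ".", prefix: str = "chapter") -> list[Path]:
    """Write pieces to chapter00, chapter01, ... (csplit's default two-digit naming)."""
    paths = []
    for index, piece in enumerate(pieces):
        path = Path(out_dir) / f"{prefix}{index:02d}"
        path.write_text(piece, encoding="utf-8")
        paths.append(path)
    return paths
```

One difference from csplit: any text before the first heading becomes chapter00 only if it isn’t blank, so if the cleaned file starts directly with “Chapter I”, that chapter becomes chapter00 rather than chapter01.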

- Ran the NameDropper lookup-names Python script on each chapter file to generate a CSV file of places for each chapter. (Note that this uses C shell foreach syntax; if you use a different shell, such as bash, you’ll need its for-loop syntax instead.)

```csh
foreach ch ( chapter* )
    echo $ch
    lookup-names --input text $ch -c 0.1 --types Place --csv $ch.csv
end
```
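If you’d rather not depend on a particular shell, the same loop can be sketched in Python with `subprocess`. This sketch only assembles exactly the lookup-names arguments shown above and runs them; it assumes lookup-names is on your PATH, and the helper names are hypothetical. It also skips files that already end in .csv, since the `chapter*` glob would otherwise pick up the output of a previous run.

```python
import glob
import subprocess


def build_commands(chapter_files, confidence=0.1):
    """Build one lookup-names command per chapter file, mirroring the foreach loop."""
    commands = []
    for chapter in sorted(chapter_files):
        if chapter.endswith(".csv"):  # skip output from an earlier run
            continue
        commands.append([
            "lookup-names", "--input", "text", chapter,
            "-c", str(confidence), "--types", "Place",
            "--csv", f"{chapter}.csv",
        ])
    return commands


def run_lookups(pattern="chapter*", confidence=0.1):
    """Run lookup-names on every matching file, printing each name first like `echo $ch`."""
    for command in build_commands(glob.glob(pattern), confidence):
        print(command[3])
        subprocess.run(command, check=True)  # stop if any lookup fails
```

Calling `run_lookups()` from the directory containing the chapter files is equivalent to the loop above; `run_lookups(confidence=0.01)` reproduces the lower-confidence run mentioned at the end of this post.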

- At this point, I concatenated the individual chapter CSV files into a single CSV file and imported it into Excel, where I spent some time sorting the results by support and similarity scores, trying to find reasonable cut-off values that would filter out mis-recognized names without losing too many accurate names that DBpedia Spotlight had identified with low certainty. It was helpful to look at the data and get familiar with the results, but in hindsight I might skip this step.
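Concatenating the chapter files can be sketched with Python’s `csv` module. This sketch assumes each chapter CSV starts with a header row and that all files share the same columns (I’m not reproducing NameDropper’s actual column names here); it adds a hypothetical `source_file` column so each row can be traced back to its chapter, and the function name is hypothetical too.

```python
import csv


def concatenate_csv(paths, out_path):
    """Merge per-chapter CSV files into one file, tagging each row with its source file."""
    writer = None
    with open(out_path, "w", newline="", encoding="utf-8") as out:
        for path in paths:
            with open(path, newline="", encoding="utf-8") as src:
                reader = csv.DictReader(src)
                if reader.fieldnames is None:  # empty file: nothing to copy
                    continue
                if writer is None:
                    # Assumes every chapter file shares the first file's header row
                    writer = csv.DictWriter(out, fieldnames=["source_file", *reader.fieldnames])
                    writer.writeheader()
                for row in reader:
                    writer.writerow({"source_file": path, **row})
```

Something like `concatenate_csv(sorted(glob.glob("chapter*.csv")), "all-chapters.csv")` (the output name is just an example) produces a single file with one header row that Excel can open directly.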

- I wrote some Python code to iterate over the CSV files, aggregate the unique DBpedia URIs, and generate a GeoRSS file that could be imported into Google Maps. It’s not a long script, but it’s too long to include in a blog post, so I’ve created a GitHub gist: csv2georss.py. I experimented with filtering out names based on the DBpedia Spotlight similarity and support scores, but I couldn’t find a setting that omitted bad results without losing a lot of interesting data, and it turned out to be easier to remove places from the final map.
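The aggregation and GeoRSS steps can be sketched roughly as follows. This is not the csv2georss.py gist, just a minimal illustration under stated assumptions: rows are dictionaries with hypothetical `uri` and `context` keys (NameDropper’s real CSV headers may differ), coordinates have already been looked up into a dictionary keyed by DBpedia URI, and the output is an RSS 2.0 feed with a GeoRSS Simple `georss:point` element (latitude, then longitude) in each item. The function names are hypothetical.

```python
import xml.etree.ElementTree as ET
from urllib.parse import unquote

GEORSS_NS = "http://www.georss.org/georss"
ET.register_namespace("georss", GEORSS_NS)  # serialize as georss:point


def aggregate_places(rows, uri_key="uri", context_key="context"):
    """Group context snippets by DBpedia URI, keeping first-seen order."""
    places = {}
    for row in rows:
        places.setdefault(row[uri_key], []).append(row[context_key])
    return places


def label_from_uri(uri):
    """Turn http://dbpedia.org/resource/Salt_Lake_City into 'Salt Lake City'."""
    return unquote(uri.rsplit("/", 1)[-1]).replace("_", " ")


def build_georss(places, coordinates, title="Places",
                 link="http://dbpedia.org/", description="Places named in the text"):
    """Return an RSS 2.0 document with one georss:point item per place that has coordinates."""
    rss = ET.Element("rss", {"version": "2.0"})
    channel = ET.SubElement(rss, "channel")
    ET.SubElement(channel, "title").text = title
    ET.SubElement(channel, "link").text = link
    ET.SubElement(channel, "description").text = description
    for uri, snippets in places.items():
        if uri not in coordinates:
            continue  # no coordinates in DBpedia: nothing to plot
        lat, lon = coordinates[uri]
        item = ET.SubElement(channel, "item")
        ET.SubElement(item, "title").text = label_from_uri(uri)
        ET.SubElement(item, "link").text = uri  # the map popup links back to DBpedia
        ET.SubElement(item, "description").text = " ... ".join(snippets)
        ET.SubElement(item, f"{{{GEORSS_NS}}}point").text = f"{lat} {lon}"
    return ET.tostring(rss, encoding="unicode")
```

Because every mention of a place is folded into a single item, each place appears once on the map with all of its context snippets, which is also what makes cleaning up the map afterwards manageable. Printing the result of `build_georss` lets you redirect it to a file, as in the command below.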

- Ran the script to generate the GeoRSS:

```sh
python csv2georss.py > 80days-georss.xml
```

- Made a new Google Map and imported the GeoRSS data. (Log in to a Google account at maps.google.com, select ‘create map’, then ‘import’, and choose the GeoRSS file generated above. A couple of times Google showed only the first place name; if that happens, I recommend simply doing the import again and checking the box to replace everything on the map.)

- Went through the map and, using the context snippets included in the descriptions, removed places that had been mis-recognized from common words. For example, I ran into Isle of Man for “man,” Winnipeg for “win,” and Metropolitan Museum of Art for “met.” Because the script aggregates multiple references to the same place, each mis-recognized name only had to be removed once. When I ran the lookup-names script with -c 0.1, I only had to remove 5 of these; when I ran it with -c 0.01, I had to remove significantly more (over 30).
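A rough heuristic could shortlist candidates before this manual pass: genuine place names are normally capitalized, so a match whose surface form in the text starts with a lowercase letter (man, win, met) is suspect. This is a hypothetical helper, not something NameDropper provides, and it assumes rows with hypothetical `surface_form` and `uri` keys. It will miss common words that happen to be capitalized at the start of a sentence, so it narrows the review rather than replacing it.

```python
def flag_suspect_matches(rows, surface_key="surface_form", uri_key="uri"):
    """Map each DBpedia URI to the lowercase surface forms that were linked to it."""
    suspects = {}
    for row in rows:
        surface = row[surface_key].strip()
        if surface[:1].islower():  # e.g. "man" linked to Isle_of_Man
            suspects.setdefault(row[uri_key], set()).add(surface)
    return suspects
```

Since the results are keyed by URI, each flagged resource appears once no matter how many times the common word occurs, which matches how the aggregated map needs to be cleaned up.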
